How does entropy change? Thermodynamic entropy. Circular process. Carnot cycle

In most chemical processes, two phenomena occur simultaneously: the transfer of energy and a change in the ordered arrangement of particles relative to each other. All particles (molecules, atoms, ions) tend to move randomly, so a system tends to pass from a more ordered state to a less ordered one. The quantitative measure of the disorder of a system is the entropy S. For example, if a gas cylinder is connected to an empty vessel, the gas from the cylinder spreads over the entire volume of the vessel. The system passes from a more ordered state to a less ordered one, which means that the entropy increases (ΔS > 0).

Hardly any concept from physics is used outside physics as often as entropy, and as a result it often drifts away from its actual meaning. The concept itself has a very narrow meaning. The specific definition of this physical quantity was given by the Austrian physicist Ludwig Boltzmann in the second half of the 19th century. He focused on the microscopic behavior of a fluid, i.e. a gas or a liquid. The disordered motion of its atoms or molecules was understood as heat, which was decisive for his definition.

In a closed system with a fixed volume and a fixed number of particles, Boltzmann showed, the entropy is proportional to the logarithm of the number of microstates of the system. By microstates he meant all the possible ways of arranging the molecules or atoms of the enclosed fluid. His formula defines entropy as a measure of the "freedom of arrangement" of molecules and atoms: if the number of accessible microstates increases, the entropy increases. The fewer ways there are to arrange the fluid particles, the lower the entropy.
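Boltzmann's proportionality can be tried out numerically. The sketch below assumes the usual form S = k ln W with equally likely microstates; the function name and the particle count are illustrative choices, not taken from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def boltzmann_entropy(num_microstates: float) -> float:
    """Entropy S = k * ln(W) for W equally likely microstates."""
    if num_microstates < 1:
        raise ValueError("the number of microstates must be at least 1")
    return K_B * math.log(num_microstates)


# Doubling the volume available to N gas particles multiplies the number of
# positional microstates by 2**N, so the entropy grows by N * k * ln 2.
N = 1000  # hypothetical particle count, kept small to stay within float range
delta_s = boltzmann_entropy(2.0 ** N) - boltzmann_entropy(1.0)
print(delta_s)  # entropy increase in J/K
```

More accessible microstates always give a larger entropy, which is the statement made in the paragraph above.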

Entropy always increases (ΔS > 0) when a system passes from a more ordered state to a less ordered one: when a substance passes from the crystalline state to the liquid state and from the liquid to the gaseous state, when the temperature rises, when a crystalline substance dissolves or dissociates, and so on.

When a system passes from a less ordered state to a more ordered one, its entropy decreases (ΔS < 0), for example during condensation, crystallization, a decrease in temperature, and so on.

Boltzmann's formula is often interpreted as if entropy were equivalent to "disorder". However, this simplistic picture is easily misleading. Bath foam is an example: when the bubbles burst and the surface of the water becomes smooth, the disorder seems to decrease. But the entropy does not! In fact, it even increases, because after the foam has burst the space available to the liquid molecules is no longer limited to the films of the bubbles, so the number of accessible microstates has increased.

What is entropy

Boltzmann's definition explains one side of the term, but entropy also has another, macroscopic aspect, which the German physicist Rudolf Clausius had discovered a few years earlier. By then the steam engine, the classic heat engine, had been invented. Heat engines convert a temperature difference into mechanical work. Physicists at the time tried to understand the principles that govern these machines. They found that only a few percent of the thermal energy could be converted into mechanical energy.

In thermodynamics, the change in entropy is related to heat by the expression:

dS = δQ/T or, for a process at constant temperature and pressure (such as a phase transition), ΔS = ΔH/T
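As a quick illustration of the relation ΔS = ΔH/T, the sketch below estimates the molar entropy of melting of ice. The enthalpy of fusion used here (about 6.01 kJ/mol at 273.15 K) is a commonly tabulated value and an assumption of this example; it does not come from the text.

```python
def transition_entropy(delta_h_j_per_mol: float, temperature_k: float) -> float:
    """Return dS = dH / T in J/(K mol) for a transition at constant T and p."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return delta_h_j_per_mol / temperature_k


DELTA_H_FUSION = 6010.0  # J/mol, approximate handbook value for ice (assumption)
T_MELT = 273.15          # K, melting point of ice at normal pressure

ds_fusion = transition_entropy(DELTA_H_FUSION, T_MELT)
print(round(ds_fusion, 1))  # 22.0
```

The positive result agrees with the qualitative rule that melting increases entropy.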

The entropies of substances, like their enthalpies of formation, are referred to standard conditions. The standard entropy of 1 mole of a substance is denoted S°298; it is a reference value measured in J/(K·mol) (Appendix 2).

For example, the standard entropy of water in its different states of aggregation:

The rest was somehow lost, and nobody understood why. The theory of thermodynamics evidently lacked a physical concept that took into account the different grades of energy and limited the possibility of converting thermal energy into mechanical energy. The solution came in the form of entropy. Clausius introduced the term as a thermodynamic variable and defined it as a macroscopic measure of a property that limits the usability of energy.

According to Clausius, the change in the entropy of a system depends on the heat supplied and on the temperature. Entropy is always transferred together with heat. In addition, Clausius came to the conclusion that entropy in closed systems, unlike energy, is not a conserved quantity. This became the second law of thermodynamics.

ice: S°298 = 39.7 J/(K·mol);

water: S°298 = 70.08 J/(K·mol);

water vapor: S°298 = 188.72 J/(K·mol),

i.e. the entropy increases: the degree of disorder of a substance is greater in the gaseous state.

For graphite S°298 = 5.74 J/(K·mol), and for diamond S°298 = 2.36 J/(K·mol), since a substance with a less rigid, less ordered structure has a higher entropy than one with a more rigid crystal lattice.

"In a closed system, entropy never decreases." Entropy therefore always increases or remains constant. This introduces an arrow of time into the physics of closed systems, since thermodynamic processes in closed systems are irreversible when the entropy increases.

Theory of laboratory work

The process would be reversible if the entropy remained constant, but this is possible only in theory: all real processes are irreversible. In Boltzmann's terms, one can also say that the number of possible microstates increases at all times. This microscopic interpretation extends the thermodynamic, macroscopic interpretation of Clausius. Entropy finally solved the mystery of the energy that vanishes in heat engines: part of the thermal energy is unavailable for mechanical work and is given off again as heat, because the entropy in closed systems must not decrease.

The standard entropy S°298, J/(K·mol), grows as molecules become more complex.

Entropy also changes in the course of chemical reactions: when the number of molecules of gaseous substances increases, the entropy of the system increases, and when it decreases, the entropy decreases.

The change in the entropy of the system as a result of the processes is determined by the equation:

General information about thermodynamics

Since the results of Clausius and Boltzmann, entropy has also found its way into other areas of physics. Even outside physics it has been taken up, at least as a mathematical concept. The mathematician Claude Shannon used this quantity to characterize the loss of information in telephone transmissions.

The ideal cycle of a Carnot heat engine

Entropy also plays a role in chemistry and biology: in some open systems new structures can form, as long as entropy is released to the surroundings. These must be so-called dissipative systems, in which energy is converted into thermal energy. This theory of structure formation comes from the Belgian physical chemist Ilya Prigogine. To this day, work is being published that adds new aspects to the physical scope of the concept.

ΔS° = Σ S°(products) − Σ S°(reactants)

For example, in the reaction

C (graphite) + CO2 (g) = 2 CO (g); ΔS°298 = 87.8 J/K

there is 1 mol of gaseous substance, CO2 (g), on the left side of the equation and 2 mol of gaseous substance, CO (g), on the right, which means that the volume of the system increases and the entropy increases (ΔS > 0).

With an increase in entropy (ΔS > 0), reactions also occur:

Why is the efficiency of heat engines limited? Rudolf Clausius solved this riddle by introducing the concept of entropy. He considered the cyclic process of an idealized heat engine in which expansion and compression alternate under isothermal and isentropic conditions. Combining the conservation of energy with the second law of thermodynamics leads to the following inequality for the efficiency of this so-called Carnot process: η ≤ (T1 − T2)/T1, where T1 and T2 are the absolute temperatures of the hot and cold reservoirs.

Thus, the maximum achievable efficiency of a heat engine is limited by the laws of thermodynamics. For example, if the machine runs between 100 and 200 degrees Celsius, the maximum achievable efficiency is about 21 percent. Two further useful insights can be derived mathematically from the conservation of energy and the second law of thermodynamics. First, heat can pass from a cold body to a warm one only if work is done: refrigerators and heat pumps need a power supply.
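The 21 percent figure can be checked directly. The following is a minimal sketch that assumes the standard Carnot limit η = 1 − T_cold/T_hot with absolute temperatures; the function names are illustrative.

```python
def celsius_to_kelvin(t_celsius: float) -> float:
    """Convert a Celsius temperature to kelvin."""
    return t_celsius + 273.15


def carnot_efficiency(t_hot_k: float, t_cold_k: float) -> float:
    """Upper bound on heat-engine efficiency between two reservoirs."""
    if not 0 < t_cold_k < t_hot_k:
        raise ValueError("need 0 < t_cold_k < t_hot_k (absolute temperatures)")
    return 1.0 - t_cold_k / t_hot_k


eta_max = carnot_efficiency(celsius_to_kelvin(200.0), celsius_to_kelvin(100.0))
print(f"{eta_max:.1%}")  # 21.1%
```

Note that the temperatures must be converted to kelvin first; using the Celsius values directly would wrongly suggest 50 percent.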

2 H2O (g) = 2 H2 (g) + O2 (g)

2 H2O2 (g) = 2 H2O (g) + O2 (g)

CaCO3 (c) = CaO (c) + CO2 (g), ΔS°298 = 160.48 J/K

In the reaction of ammonia formation

N2 (g) + 3 H2 (g) = 2 NH3 (g); ΔS°298 = −103.1 J/K

the volume of the system decreases, and therefore the entropy also decreases (ΔS < 0).

Reactions that proceed with a decrease in entropy (ΔS < 0) include:

3 H2 (g) + N2 (g) = 2 NH3 (g)

2 H2 (g) + O2 (g) = 2 H2O (g)

Secondly, no work can be obtained from a single heat reservoir at constant temperature; a heat flow between reservoirs at different temperatures is always necessary. The term entropy was coined by Rudolf Clausius from Greek words and translates roughly as "transformation content".

The formula says that entropy is always transferred along with heat. Boltzmann's definition of entropy is based on the understanding of heat as the disordered motion of atoms or molecules. Microstates are the possible ways in which the molecules or atoms of an enclosed fluid can be arranged. Entropy is an extensive quantity. Every equilibrium state of a thermodynamic system can be uniquely assigned an entropy value. In statistical physics, entropy is a measure of the volume of phase space that a system can reach.

In reactions between solids, and in processes in which the amount of gaseous substances does not change, the entropy remains practically unchanged; its small change is determined by the structure of the molecules or of the crystal lattice, for example:

C (graphite) + O2 (g) = CO2 (g), ΔS°298 = 2.9 J/K

Al (c) + Sb (c) = AlSb (c), ΔS°298 = −5.01 J/K

Example #1. Calculate and explain the entropy change for a process

In the framework of classical thermodynamics, the change in entropy is positive when heat is supplied and negative when heat is removed. Clausius also described the increase of entropy without heat transfer, through irreversible processes in an isolated system, by the inequality ΔS ≥ 0.

History of the term "entropy"

This inequality is a form of the second law of thermodynamics. For a process in an adiabatic system where only the initial and final states can be specified, the entropy change cannot be computed directly from the heat exchanged. However, for an ideal gas, the entropy difference can be calculated in a simple way through a reversible isothermal substitute process, as described in the Examples section. Alongside energy, entropy is the most important concept of thermodynamics, and for a better understanding it is useful to go back to the starting point of this science and retrace its development.

2 SO2 (g) + O2 (g) = 2 SO3 (g)

Solution.

ΔS° = 2 S°SO3 (g) − (2 S°SO2 (g) + S°O2 (g)) = 2 · 256.23 − (2 · 248.1 + 205.04) = −188.78 J/K.

Since ΔS < 0, the entropy decreases because the volume of the system decreases, i.e. the number of moles of gaseous substances decreases (3 mol of gaseous substances on the left side, 2 mol on the right).
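The same bookkeeping (sum over products minus sum over reactants, each weighted by its stoichiometric coefficient) can be sketched in a few lines. The helper name and the dictionary layout are illustrative assumptions; the standard entropies are the ones used in the solution above.

```python
def reaction_entropy(reactants: dict, products: dict, s0: dict) -> float:
    """Return the standard entropy change of a reaction in J/K.

    reactants and products map a species label to its stoichiometric
    coefficient; s0 maps a species label to its standard molar entropy.
    """
    def side_total(side: dict) -> float:
        return sum(coeff * s0[species] for species, coeff in side.items())

    return side_total(products) - side_total(reactants)


# Standard entropies in J/(K mol), as quoted in the worked solution
S0 = {"SO2(g)": 248.1, "O2(g)": 205.04, "SO3(g)": 256.23}

ds_so3 = reaction_entropy({"SO2(g)": 2, "O2(g)": 1}, {"SO3(g)": 2}, S0)
print(round(ds_so3, 2))  # -188.78
```

Writing the coefficients explicitly makes it harder to drop a factor of 2 for a species such as O2 when a reaction consumes more than one mole of it.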

Example #2. Calculate and explain the entropy change for a process:

The machine was able to do its job, but it needed a lot of fuel. At that time the connection between energy and heat was completely unclear, and it would be more than 130 years before Julius Mayer published it. Inspired by his father's work on mills, Carnot described a steam engine as a cyclic process in which heat flows from a hot source to a cold sink and thereby does work.

In his original work, Carnot expressed the view that heat is a kind of imponderable substance that always flows from a hotter body to a colder one, just as water always flows downhill. And just like falling water, heat can do more work the greater the drop; in particular, the machine cannot do more work than the heat supplied to it. Carnot later corrected himself and recognized the equivalence of heat and energy ten years before Mayer, Joule and Thomson. He was thus ahead of his time, but unfortunately he died young and his work went unnoticed at first.

Solution. The reaction considered is Cu2S (c) + 2 O2 (g) = 2 CuO (c) + SO2 (g). From Appendix 2 we take the standard entropies of the substances: S°CuO (c) = 42.64, S°SO2 (g) = 248.1, S°Cu2S (c) = 119.24 and S°O2 (g) = 205.04 J/(K·mol).

According to the corollary of Hess's law,

ΔS° = (2 S°CuO (c) + S°SO2 (g)) − (S°Cu2S (c) + 2 S°O2 (g)) = (2 · 42.64 + 248.1) − (119.24 + 2 · 205.04) = −195.94 J/K.

The entropy decreases, because the amount of gaseous substances decreases from 2 mol of O2 (g) on the left to 1 mol of SO2 (g) on the right.

Example #3. Determine the entropy change for the process:

It was Clausius who first formulated the connection between the temperature difference (between source and sink) and the efficiency of the heat engine, and showed that this efficiency cannot be exceeded by any other heat engine, since heat would otherwise flow spontaneously from a cold body to a hot one. The impossibility of such a process in nature is now called the second law of thermodynamics. Clausius formulated it in terms of a cyclic process:

There is no cyclically operating machine whose only effect is to transfer heat from a cooler reservoir to a warmer one. Put simply, the law states that temperature differences cannot increase spontaneously in nature. From this postulate Clausius was able to derive a theorem.

C (graphite) + O2 (g) = CO2 (g)

Solution: From Appendix 2 we take the standard entropy values.

ΔS° = S°CO2 (g) − (S°C (graphite) + S°O2 (g)) = 213.68 − (5.74 + 205.04) = 2.9 J/K.

Since ΔS > 0, the entropy increases slightly during the reaction. The volume of the system does not change, but the entropy increases because the CO2 molecule has a more complex structure than the O2 molecule.

Thermodynamics as a function of state

For any cyclic process, ∮ δQ/T ≤ 0. The equality sign applies only to reversible processes. With this theorem of Clausius it is natural to define the quantity dS = δQ/T for reversible heat exchange. Clausius called this quantity entropy, and over time it has become customary to formulate the second law directly in terms of entropy, which in no way leads to a deeper understanding. Only decades later, with Boltzmann's statistical mechanics, could entropy be explained as a measure of the accessible microstates of a system. Heat is distributed randomly over the atoms and molecules; energy spreads out and passes from hot to cold because the way back is simply too unlikely.

Gibbs energy

Spontaneously, i.e. without the expenditure of energy from the outside, the system can only go from a less stable state to a more stable one.

In chemical processes two factors act at the same time:

The tendency for the system to transition to the state with the lowest internal energy, which reduces the enthalpy of the system ( ∆H → min);

The tendency for a system to move to a more disordered state, which increases the entropy ( ∆S → max).

The change in the energy of the system is called the enthalpy factor; it is expressed quantitatively by the thermal effect of the reaction, ΔH. It reflects the tendency to form bonds and to enlarge particles.

The increase in entropy in a system is called the entropy factor; it is expressed quantitatively in units of energy (J) and is calculated as T ΔS. It reflects the tendency towards a more random arrangement of particles, towards the disintegration of substances into simpler particles.

The total effect of these two opposing tendencies in processes occurring at constant T and P is reflected by the change in the isobaric-isothermal potential, or Gibbs free energy, ΔG, and is expressed by the equation:

∆G = ∆H – T ∆S

At constant pressure and temperature (isobaric-isothermal process), reactions proceed spontaneously in the direction of decreasing the Gibbs energy.

By the nature of the change in the Gibbs energy, one can judge the fundamental possibility or impossibility of implementing the process.

If ΔG < 0, the reaction can proceed spontaneously in the forward direction. The greater the decrease in the enthalpy factor and the increase in the entropy factor, the stronger the tendency of the system for the reaction to proceed. The Gibbs energy in the initial state of the system is greater than in the final state.

If the Gibbs energy ∆G > 0, the reaction cannot proceed spontaneously in the forward direction.

If ΔG = 0, the system is in a state of chemical equilibrium and the enthalpy and entropy factors are equal (ΔH = T ΔS). The temperature at which ΔG = 0 is called the reaction start temperature: T = ΔH/ΔS. At this temperature the forward and reverse reactions are equally likely. The possibility (or impossibility) of a spontaneous reaction for various combinations of the values of ΔH and ΔS is presented in the table.
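The four sign combinations of ΔH and ΔS follow directly from ΔG = ΔH − T ΔS. The sketch below spells them out; it assumes that ΔH and ΔS do not depend on temperature, and the function name and wording of the verdicts are illustrative.

```python
def spontaneity(delta_h: float, delta_s: float) -> str:
    """Qualitative verdict from the signs of dH and dS in dG = dH - T*dS."""
    if delta_h < 0 and delta_s > 0:
        return "spontaneous at any temperature"      # dG < 0 for all T
    if delta_h > 0 and delta_s < 0:
        return "not spontaneous at any temperature"  # dG > 0 for all T
    if delta_h < 0 and delta_s < 0:
        return "spontaneous below T = dH/dS"         # enthalpy factor wins at low T
    if delta_h > 0 and delta_s > 0:
        return "spontaneous above T = dH/dS"         # entropy factor wins at high T
    return "borderline case (dH or dS is zero)"


print(spontaneity(-545.5, -195.94))  # exothermic, entropy decreases
print(spontaneity(101.46, 128.41))   # endothermic, entropy increases
```

Only the signs matter here, so the two arguments may be given in any consistent units.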

Example #1. Calculate ΔG° of the reaction

Cu2S (c) + 2 O2 (g) = 2 CuO (c) + SO2 (g)

Indicate whether it can proceed under standard conditions in a closed system.

Solution: The change in the Gibbs free energy of a chemical reaction under standard conditions (T = 298 K, P = 101325 Pa) is calculated from the equation ΔG° = ΔH° − T ΔS°.

The thermal effect of the chemical reaction is ΔH° = −545.5 kJ (see calculation above). The entropy change in the reaction, calculated from the standard entropies with 2 mol of O2 (g) on the left side, is ΔS° = (2 · 42.64 + 248.1) − (119.24 + 2 · 205.04) = −195.94 J/K.

When calculating ΔG°, it must be taken into account that ΔH° is expressed in kJ and ΔS° in J/K, so ΔS° must be multiplied by 10⁻³.

The change in the Gibbs free energy of the reaction:

ΔG° = ΔH° − T ΔS° = −545.5 − 298 · (−195.94) · 10⁻³ = −487.11 kJ.

Since ΔG° < 0, the spontaneous occurrence of this chemical reaction in the forward direction is possible under standard conditions.

Example #2. Based on the values of ΔH° and ΔS°, calculate ΔG° of the reaction

MgCO3 (c) = MgO (c) + CO2 (g)

Indicate whether it can proceed under standard conditions in a closed system. At what temperature will magnesium carbonate begin to decompose?

Solution: From Appendices 1 and 2 we take the standard enthalpies of formation ΔH°f, kJ/mol, and the standard entropies S°, J/(K·mol).

Calculate the changes in enthalpy and entropy:

ΔH° = (ΔH°f, MgO (c) + ΔH°f, CO2 (g)) − ΔH°f, MgCO3 (c) = [−601.24 + (−393.5)] − (−1096.21) = 101.46 kJ;

ΔS° = (S°MgO (c) + S°CO2 (g)) − S°MgCO3 (c) = (S°MgO (c) + S°CO2 (g)) − 112.13 = 128.41 J/K.

ΔG° = ΔH° − T ΔS° = 101.46 − 298 · 128.41 · 10⁻³ = 63.19 kJ.

Since ΔG° > 0, this reaction cannot proceed spontaneously under standard conditions. Since ΔH > 0 and ΔS > 0, the reaction can proceed spontaneously at a sufficiently high temperature.

Calculate the temperature at which the decomposition of magnesium carbonate begins:

T = ΔH° / ΔS° = 101.46 / (128.41 · 10⁻³) = 790.12 K (517.12 °C).

At 790.12 K the forward and reverse reactions are equally probable. Above 790.12 K the forward reaction proceeds, i.e. magnesium carbonate decomposes.
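The arithmetic of this example, including the kJ versus J/K unit conversion, can be reproduced with a short sketch. The function names are illustrative; ΔH° and ΔS° are the values obtained above and are assumed not to depend on temperature.

```python
def gibbs_energy_kj(delta_h_kj: float, delta_s_j_per_k: float,
                    temperature_k: float = 298.0) -> float:
    """dG = dH - T*dS in kJ, with dS converted from J/K to kJ/K."""
    return delta_h_kj - temperature_k * delta_s_j_per_k * 1e-3


def equilibrium_temperature_k(delta_h_kj: float, delta_s_j_per_k: float) -> float:
    """Temperature at which dG = 0, i.e. T = dH / dS."""
    return delta_h_kj / (delta_s_j_per_k * 1e-3)


DELTA_H = 101.46  # kJ, from the worked example
DELTA_S = 128.41  # J/K, from the worked example

dg_298 = gibbs_energy_kj(DELTA_H, DELTA_S)
t_start = equilibrium_temperature_k(DELTA_H, DELTA_S)
print(round(dg_298, 2))   # 63.19
print(round(t_start, 1))  # 790.1
```

Evaluating `gibbs_energy_kj` slightly above and below `t_start` shows the sign of ΔG changing, which is what marks the onset of decomposition.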

§6 Entropy

Usually, a process in which a system passes from one state to another proceeds in such a way that it cannot be carried out in the opposite direction with the system passing through the same intermediate states and without any changes in the surrounding bodies. This is because part of the energy is dissipated in the process, for example through friction, radiation, and so on. Almost all processes in nature are irreversible; in any process some energy is dissipated. The concept of entropy is introduced to characterize this dissipation of energy. (The value of the entropy characterizes the thermal state of the system and determines the probability that this state of the body is realized. The more probable a given state, the greater its entropy.) All natural processes are accompanied by an increase in entropy. Entropy remains constant only in an idealized reversible process occurring in a closed system, that is, in a system that exchanges no energy with bodies external to it.

Entropy and its thermodynamic meaning:

Entropy is a state function of the system whose infinitesimal change in a reversible process equals the ratio of the infinitesimal amount of heat supplied in this process to the temperature at which it was supplied: dS = δQ/T.

In a finite reversible process, the change in entropy can be calculated using the formula:

ΔS = S2 − S1 = ∫₁² δQ/T

where the integral is taken from the initial state 1 of the system to the final state 2.

Since entropy is a state function, the integral does not depend on the shape of the contour (path) along which it is calculated; it is determined only by the initial and final states of the system.

- In any reversible process the change in entropy is zero:

ΔS = 0 (1)

- Thermodynamics proves that the entropy S of a system undergoing an irreversible cycle increases:

ΔS > 0 (2)

Expressions (1) and (2) apply only to closed systems; if the system exchanges heat with the external environment, its entropy S can behave in any way.

Relations (1) and (2) can be represented as the Clausius inequality

∆S ≥ 0

i.e. the entropy of a closed system can either increase (in the case of irreversible processes) or remain constant (in the case of reversible processes).

If the system makes an equilibrium transition from state 1 to state 2, the entropy change is

ΔS1→2 = S2 − S1 = ∫₁² δQ/T = ∫₁² (dU + δA)/T

where dU and δA are written for the specific process. This formula determines the entropy only up to an additive constant: it is not the entropy itself that has physical meaning, but the difference of entropies. Let us find the change in entropy in processes of an ideal gas. Since dU = (m/M) C_V dT and δA = p dV = (m/M) R T dV/V, where m is the mass of the gas, M its molar mass, C_V its molar heat capacity at constant volume and R the universal gas constant,

ΔS1→2 = (m/M) [C_V ln(T2/T1) + R ln(V2/V1)]

i.e. the entropy change ΔS1→2 of an ideal gas during its transition from state 1 to state 2 does not depend on the type of process.

Since δQ = 0 for an adiabatic process, ΔS = 0 and S = const; that is, a reversible adiabatic process proceeds at constant entropy. Therefore it is called isentropic.

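In an isothermal process (T = const; T1 = T2), with m/M the amount of gas in moles and R the universal gas constant:

ΔS = (m/M) R ln(V2/V1)

In an isochoric process (V = const; V1 = V2), with C_V the molar heat capacity at constant volume:

ΔS = (m/M) C_V ln(T2/T1)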
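The ideal-gas cases discussed here can be checked numerically. This sketch assumes the standard result ΔS = n [C_V ln(T2/T1) + R ln(V2/V1)] with n = m/M moles and a monatomic gas (C_V = 3R/2); the function name and the sample states are illustrative.

```python
import math

R = 8.314  # universal gas constant, J/(mol K)


def ideal_gas_entropy_change(n_mol: float, cv_molar: float,
                             t1: float, t2: float,
                             v1: float, v2: float) -> float:
    """Entropy change of an ideal gas between states (T1, V1) and (T2, V2)."""
    return n_mol * (cv_molar * math.log(t2 / t1) + R * math.log(v2 / v1))


CV_MONATOMIC = 1.5 * R  # assumption: monatomic ideal gas

# Isothermal doubling of the volume: only the R*ln(V2/V1) term survives
ds_isothermal = ideal_gas_entropy_change(1.0, CV_MONATOMIC, 300.0, 300.0, 1.0, 2.0)

# Isochoric doubling of the temperature: only the C_V*ln(T2/T1) term survives
ds_isochoric = ideal_gas_entropy_change(1.0, CV_MONATOMIC, 300.0, 600.0, 1.0, 1.0)

# Reversible adiabatic expansion: T * V**(R/C_V) stays constant, so dS = 0
t2_adiabatic = 300.0 * (1.0 / 2.0) ** (R / CV_MONATOMIC)
ds_adiabatic = ideal_gas_entropy_change(1.0, CV_MONATOMIC, 300.0, t2_adiabatic, 1.0, 2.0)

print(round(ds_isothermal, 2))  # 5.76
print(round(ds_isochoric, 2))   # 8.64
print(abs(ds_adiabatic) < 1e-9)  # True
```

Because entropy is a state function, any path between the same two states gives the same values.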

Entropy has the property of additivity: the entropy of a system is equal to the sum of the entropies of the bodies that make up the system, S = S1 + S2 + S3 + ... The qualitative difference between the thermal motion of molecules and other forms of motion is its randomness, its disorder. Therefore, to characterize thermal motion, a quantitative measure of the degree of molecular disorder must be introduced. Any given macroscopic state of a body with certain average values of its parameters is nothing other than a continuous succession of closely related microstates that differ from one another in the distribution of molecules over different parts of the volume and in the distribution of energy among the molecules. The number of these continuously changing microstates characterizes the degree of disorder of the macroscopic state of the whole system; this number w is called the thermodynamic probability of the given macrostate. The thermodynamic probability w of a state of a system is the number of ways in which the given state of a macroscopic system can be realized, or the number of microstates that realize the given macrostate (w ≥ 1, whereas a mathematical probability is ≤ 1).

By convention, the measure of the unexpectedness of an event is the logarithm of its probability taken with a minus sign: the unexpectedness of a state equals minus the logarithm of its probability.

According to Boltzmann, the entropy S of a system and its thermodynamic probability are related as follows:

S = k ln w

where k is the Boltzmann constant (k = 1.38·10⁻²³ J/K). Thus, entropy is determined by the logarithm of the number of microstates by which a given macrostate can be realized. Entropy can be regarded as a measure of the probability of the state of a thermodynamic system. The Boltzmann formula allows entropy to be given the following statistical interpretation: entropy is a measure of the disorder of a system. Indeed, the greater the number of microstates realizing a given macrostate, the greater the entropy. In the equilibrium state of the system, which is its most probable state, the number of microstates is maximal, and so is the entropy.

Since real processes are irreversible, it can be argued that all processes in a closed system lead to an increase in its entropy: this is the principle of increasing entropy. In the statistical interpretation of entropy, this means that processes in a closed system go in the direction of an increasing number of microstates, in other words from less probable states to more probable ones, until the probability of the state becomes maximal.
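A toy model makes the statistical statement concrete. The sketch below is an assumption of this example, not a model from the text: N distinguishable particles are distributed between the two halves of a vessel, the macrostate is the number of particles in the left half, and its thermodynamic probability w is the binomial coefficient.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def thermodynamic_probability(n_total: int, n_left: int) -> int:
    """Number of microstates with n_left of n_total particles in the left half."""
    return math.comb(n_total, n_left)


def entropy(n_total: int, n_left: int) -> float:
    """Boltzmann entropy S = k ln w of the macrostate."""
    return K_B * math.log(thermodynamic_probability(n_total, n_left))


N = 100  # hypothetical number of particles
weights = [thermodynamic_probability(N, n) for n in range(N + 1)]
most_probable = max(range(N + 1), key=lambda n: weights[n])

print(most_probable)                             # 50
print(entropy(N, 0))                             # 0.0
print(entropy(N, N // 2) > entropy(N, N // 4))   # True
```

The evenly spread macrostate has the largest w and therefore the largest entropy, while the fully ordered state with all particles in one half has w = 1 and zero entropy.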

§7 The second law of thermodynamics

The first law of thermodynamics, which expresses the law of conservation and transformation of energy, does not determine the direction in which thermodynamic processes proceed. Moreover, one can imagine many processes that do not contradict the first law of thermodynamics, in which energy is conserved, but which do not occur in nature. Possible formulations of the second law of thermodynamics:

1) The law of increasing entropy of a closed system in irreversible processes: any irreversible process in a closed system proceeds in such a way that the entropy of the system increases, ΔS > 0. In general, ΔS ≥ 0 (ΔS = 0 for a reversible and ΔS > 0 for an irreversible process): in processes occurring in a closed system, entropy does not decrease.

2) According to the Boltzmann formula S = k ln w, an increase in entropy means a transition of the system from a less probable state to a more probable one.

3) According to Kelvin: a circular process is not possible, the only result of which is the conversion of the heat received from the heater into work equivalent to it.

4) According to Clausius: a circular process is not possible, the only result of which is the transfer of heat from a less heated body to a more heated one.

To describe thermodynamic systems at 0 K, the Nernst-Planck theorem (the third law of thermodynamics) is used: the entropy of all bodies in equilibrium tends to zero as the temperature approaches 0 K.

From the Nernst-Planck theorem it follows that Cp = Cv = 0 at 0 K.

§8 Thermal and refrigeration machines.

Carnot cycle and its efficiency

From Kelvin's formulation of the second law of thermodynamics it follows that a perpetual motion machine of the second kind is impossible. (A perpetual motion machine of the second kind is a periodically operating engine that does work by cooling a single heat source.)

A thermostat is a thermodynamic system that can exchange heat with bodies without changing its temperature.

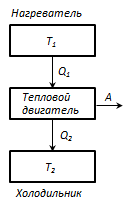

The principle of operation of a heat engine: an amount of heat Q1 is taken per cycle from a thermostat with temperature T1 (the heater), and an amount of heat Q2 is transferred per cycle to a thermostat with temperature T2 (T2 < T1), the refrigerator; in the process the work A = Q1 − Q2 is done.

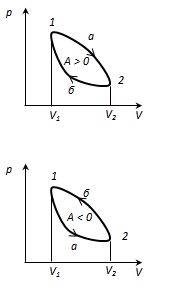

A circular process, or cycle, is a process in which the system, after passing through a series of states, returns to its original state. On a state diagram the cycle is represented by a closed curve. A cycle carried out by an ideal gas can be divided into expansion (1-2) and compression (2-1). The work of expansion is positive, A1-2 > 0, because V2 > V1; the work of compression is negative, A2-1 < 0, because the volume decreases from V2 to V1. Therefore, the work done by the gas per cycle is determined by the area enclosed by the closed curve 1-2-1. If positive work is done in a cycle (the cycle runs clockwise), the cycle is called direct; if negative work is done (the cycle runs counterclockwise), it is called reverse.

The direct cycle is used in heat engines: periodically operating engines that perform work at the expense of heat received from outside. The reverse cycle is used in refrigerating machines: periodically operating installations in which, through the work of external forces, heat is transferred to a body with a higher temperature.

As a result of a circular process, the system returns to its original state and, consequently, the total change in internal energy is zero. The first law of thermodynamics for a circular process then gives

Q = ΔU + A = A,

that is, the work done per cycle is equal to the amount of heat received from outside, where

Q = Q1 − Q2,

Q1 is the amount of heat received by the system,

Q2 is the amount of heat given off by the system.

The thermal efficiency of a circular process is equal to the ratio of the work done by the system to the amount of heat supplied to the system:

η = A/Q1 = (Q1 − Q2)/Q1 = 1 − Q2/Q1

For η = 1 the condition Q2 = 0 would have to hold, i.e. the heat engine would need only a single source of heat Q1, but this contradicts the second law of thermodynamics.

The reverse process to what happens in a heat engine is used in a refrigeration machine.

An amount of heat Q2 is taken from the thermostat with temperature T2, and an amount of heat Q1 is transferred to the thermostat with temperature T1.

Q = Q2 − Q1 < 0, therefore A < 0.

Without doing work, it is impossible to take heat from a less heated body and give it to a hotter one.

Based on the second law of t/d, Carnot deduced a theorem.

Carnot's theorem: of all periodically operating heat engines with the same heater temperature (T1) and refrigerator temperature (T2), reversible machines have the highest efficiency. The efficiencies of reversible machines operating at the same T1 and T2 are equal and do not depend on the nature of the working fluid.

A working body is a body that performs a circular process and exchanges energy with other bodies.

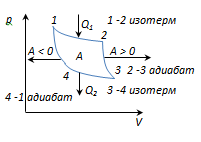

The Carnot cycle is the most economical reversible cycle, consisting of 2 isotherms and 2 adiabats.

1-2: isothermal expansion at the heater temperature T1; heat Q1 is supplied to the gas and the work A12 is done.

2-3: adiabatic expansion; the gas does work A23 > 0 on external bodies.

3-4: isothermal compression at the refrigerator temperature T2; heat Q2 is removed from the gas and the work A34 is done.

4-1: adiabatic compression; work A41 < 0 is done on the gas by external bodies.

In an isothermal process U = const, so Q1 = A12:

A12 = (m/M) R T1 ln(V2/V1) = Q1 (1)

In adiabatic expansion Q2-3 = 0, and the work A23 is done by the gas at the expense of its internal energy, A23 = −ΔU:

A23 = (m/M) C_V (T1 − T2)

The amount of heat Q2 given by the gas to the refrigerator during isothermal compression is equal to the work of compression A34:

A34 = (m/M) R T2 ln(V4/V3) = −Q2 (2)

Work of adiabatic compression:

A41 = −(m/M) C_V (T1 − T2) = −A23

Work done in a circular process

A = A 12 + A 23 + A 34 + A 41 = Q 1 + A 23 - Q 2 - A 23 = Q 1 - Q 2

and is equal to the area enclosed by the curve 1-2-3-4-1.

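Thermal efficiency of the Carnot cycle:

η = (Q1 − Q2)/Q1 = [T1 ln(V2/V1) − T2 ln(V3/V4)] / [T1 ln(V2/V1)]

From the adiabatic equations for processes 2-3 and 4-1, T1 V2^(γ−1) = T2 V3^(γ−1) and T1 V1^(γ−1) = T2 V4^(γ−1), where γ = Cp/Cv, we obtain V2/V1 = V3/V4.

Then

η = (T1 − T2)/T1 = 1 − T2/T1

i.e. the efficiency of the Carnot cycle is determined only by the temperatures of the heater and the refrigerator. To increase the efficiency, the difference T1 − T2 must be increased.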
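The derivation can be verified numerically by adding up the work on the four legs of the cycle. The sketch below assumes an ideal gas with constant molar heat capacity C_V (monatomic in the example), n = m/M moles, and illustrative volumes and temperatures; none of these numbers come from the text.

```python
import math

R = 8.314  # universal gas constant, J/(mol K)


def carnot_cycle(n_mol: float, cv_molar: float,
                 t1: float, t2: float, v1: float, v2: float) -> dict:
    """Work on each leg of an ideal-gas Carnot cycle and its efficiency.

    t1 is the heater temperature, t2 the refrigerator temperature (t1 > t2);
    v1 -> v2 is the isothermal expansion at t1.
    """
    gamma = (cv_molar + R) / cv_molar
    # Adiabats: T1 * V2**(gamma-1) = T2 * V3**(gamma-1), same for V1 and V4
    ratio = (t1 / t2) ** (1.0 / (gamma - 1.0))
    v3, v4 = v2 * ratio, v1 * ratio

    a12 = n_mol * R * t1 * math.log(v2 / v1)  # isothermal expansion, equals Q1
    a23 = n_mol * cv_molar * (t1 - t2)        # adiabatic expansion
    a34 = n_mol * R * t2 * math.log(v4 / v3)  # isothermal compression, equals -Q2
    a41 = -n_mol * cv_molar * (t1 - t2)       # adiabatic compression
    work = a12 + a23 + a34 + a41
    return {"Q1": a12, "Q2": -a34, "A": work, "eta": work / a12}


result = carnot_cycle(n_mol=1.0, cv_molar=1.5 * R,
                      t1=473.15, t2=373.15, v1=1.0, v2=2.0)
print(round(result["eta"], 4))  # 0.2113
```

The efficiency obtained from the summed work equals 1 − T2/T1 regardless of the chosen volumes or heat capacity, as Carnot's theorem requires.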
