Multiplication of matrices 2x2. Multiplication of a square matrix by a column matrix. Basic properties of the matrix product

This is one of the most common matrix operations. The matrix that is obtained after multiplication is called a matrix product.

The product of a matrix $A$ of size $m\times n$ and a matrix $B$ of size $n\times k$ is the matrix $C$ of size $m\times k$ whose element $c_{ij}$, located in the $i$-th row and $j$-th column, equals the sum of the products of the elements of the $i$-th row of $A$ and the corresponding elements of the $j$-th column of $B$.

Matrix multiplication is possible only if the number of columns of the first matrix equals the number of rows of the second matrix.

Example:

Is it possible to multiply a matrix by a matrix?

m = n, which means that these matrices can be multiplied.

If the matrices are interchanged, multiplication is no longer possible.

m ≠ n, so the multiplication cannot be performed:

Quite often you will come across trick problems in which the student is asked to multiply matrices whose product obviously does not exist.

Note that sometimes matrices can be multiplied in both orders. For example, for suitable matrices M and N, both the product MN and the product NM are possible.

This is not a very difficult operation. Matrix multiplication is best understood through specific examples, as the definition alone can be very confusing.

Let's start with the simplest example:

Must be multiplied by . First of all, we give the formula for this case:

- there is a good pattern here.


Multiply by .

The formula for this case is: .

Matrix multiplication and result:

The result is the so-called zero matrix.

It is very important to remember that the rule "changing the order of the factors does not change the product" does not work here, since almost always MN ≠ NM. Therefore, when multiplying matrices, never interchange the factors.

Now consider examples of matrix multiplication of the third order:

Multiply  on .


The formula is very similar to the previous ones:

Matrix solution:  .


Multiplying a matrix by a number is the same kind of operation, only an ordinary number (a scalar) takes the place of the second matrix. As you might guess, this multiplication is much easier to perform.

An example of multiplying a matrix by a number:

![]()

Everything is clear here - in order to multiply a matrix by a number, it is necessary to multiply each element of the matrix sequentially by the specified number. In this case, 3.

Another useful example:

- matrix multiplication by a fractional number.


First of all, let's show what not to do:

When multiplying a matrix by a fraction, you should not bring the fraction inside the matrix: first, it only complicates further work with the matrix, and second, it makes the solution harder for the teacher to check.

And, moreover, there is no need to divide each element of the matrix by -7:

.


What should be done in this case is to bring the minus sign into the matrix:

.


If all the elements of the matrix were divisible by 7 without a remainder, then it would be possible (and necessary!) to divide.

In this example it is possible and necessary to multiply all the elements of the matrix by ½, because each element of the matrix is divisible by 2 without a remainder.

Note: in higher mathematics there is no school notion of "division". Instead of the phrase "this is divided by that", you can always say "this is multiplied by a fraction". That is, division is a special case of multiplication.

The main applications of matrices are related to the operation of multiplication.

Given two matrices:

$A$ of size $m\times n$

$B$ of size $n\times k$

Because the length of a row in matrix $A$ coincides with the height of a column in matrix $B$, you can define the matrix $C=AB$, which will have dimensions $m\times k$. The element $c_{ij}$ of the matrix $C$, located in an arbitrary $i$-th row ($i=1,\ldots ,m$) and an arbitrary $j$-th column ($j=1,\ldots ,k$), is by definition equal to the scalar product of two vectors from $\mathbb{R}^{n}$, namely the $i$-th row of matrix $A$ and the $j$-th column of matrix $B$:

\[c_{ij}=a_{i1}b_{1j}+a_{i2}b_{2j}+\ldots +a_{in}b_{nj}=\sum_{t=1}^{n}a_{it}b_{tj}\]

Properties:

How is the operation of multiplying a matrix A by a number λ defined?

The product of A by a number λ is a matrix, each element of which is equal to the product of the corresponding element of A by λ. Consequence: The common factor of all matrix elements can be taken out of the matrix sign.

13. Definition of an inverse matrix and its properties.

Definition. If there are square matrices $X$ and $A$ of the same order that satisfy the condition

\[XA=AX=E,\]

where $E$ is the identity matrix of the same order as the matrix $A$, then the matrix $X$ is called the inverse of the matrix $A$ and is denoted by $A^{-1}$.

Properties of inverse matrices

Let us indicate the following properties of inverse matrices:

1) $(A^{-1})^{-1}=A$;

2) $(AB)^{-1}=B^{-1}A^{-1}$;

3) $(A^{T})^{-1}=(A^{-1})^{T}$.

1. If an inverse matrix exists, then it is unique.

2. Not every nonzero square matrix has an inverse.

14. Give the main properties of determinants. Check the property |AB|=|A|*|B| for matrices

A=

and B=


Properties of determinants:

1. If any row of the determinant consists of zeros, then the determinant itself is equal to zero.

2. When two rows are interchanged, the determinant is multiplied by -1.

3. A determinant with two identical rows is equal to zero.

4. The common factor of the elements of any row can be taken out of the sign of the determinant.

5. If the elements of a certain row of the determinant A are presented as the sum of two terms, then the determinant itself is equal to the sum of two determinants B and D. In the determinant B, the specified row consists of the first terms, in D of the second terms. The remaining rows of the determinants B and D are the same as in A.

6. The value of the determinant will not change if another row, multiplied by any number, is added to one of the rows.

7. The sum of the products of the elements of any row and the cofactors of the corresponding elements of another row is 0.

8. The determinant of the matrix $A$ is equal to the determinant of the transposed matrix $A^{T}$, i.e. the determinant does not change under transposition.
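The property |AB| = |A|·|B| from the question above can be checked numerically. The following is only a minimal Python sketch: the matrices of the exercise are not reproduced in the text, so the 2×2 matrices `A` and `B` below are made-up illustrations, and `det2` and `matmul2` are hypothetical helper names.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def matmul2(a, b):
    """Product of two 2x2 matrices by the row-by-column rule."""
    return [[sum(a[i][t] * b[t][j] for t in range(2)) for j in range(2)]
            for i in range(2)]


# Illustrative matrices (assumptions, not the ones from the exercise).
A = [[1, 2], [3, 4]]
B = [[0, 1], [5, -2]]

lhs = det2(matmul2(A, B))   # |AB|
rhs = det2(A) * det2(B)     # |A| * |B|
print(lhs, rhs)             # both values are 10
```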

15. Define the modulus and argument of a complex number. Write in trigonometric form the numbers √3+i, -1+ i.

Each complex number z=a+ib can be assigned a vector (a,b) ∈ R². The length of this vector, equal to √(a² + b²), is called the modulus of the complex number z and is denoted by |z|. The angle φ between the given vector and the positive direction of the Ox axis is called the argument of the complex number z and is denoted by arg z.

Any complex number z≠0 can be represented as z=|z|(cosφ +isinφ).

This form of writing a complex number is called trigonometric.

√3+i = 2(√3/2 + i·1/2) = 2(cos π/6 + i sin π/6);

-1+i = √2(-√2/2 + i√2/2) = √2(cos 3π/4 + i sin 3π/4).
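The modulus and argument of the two numbers from the question, √3+i and -1+i, can be double-checked with Python's standard `cmath` module. This is only an illustrative sketch; the variable names are chosen here and are not part of the original text.

```python
import cmath
import math

# The two numbers from the question: sqrt(3) + i and -1 + i.
numbers = [complex(math.sqrt(3), 1), complex(-1, 1)]

for z in numbers:
    modulus, argument = cmath.polar(z)  # (|z|, arg z) with arg z in (-pi, pi]
    # Rebuild z from its trigonometric form |z|(cos phi + i sin phi).
    rebuilt = modulus * complex(math.cos(argument), math.sin(argument))
    print(z, modulus, argument, rebuilt)
```

The printed moduli should be close to 2 and √2, and the arguments close to π/6 and 3π/4, up to floating-point rounding.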


Definition. The product of two matrices $A$ and $B$ is the matrix $C$ whose element located at the intersection of the $i$-th row and the $j$-th column equals the sum of the products of the elements of the $i$-th row of the matrix $A$ and the corresponding (in order) elements of the $j$-th column of the matrix $B$.

This definition implies the formula for the element of the matrix $C$ (here $n$ is the number of columns of $A$):

\[c_{ij}=a_{i1}b_{1j}+a_{i2}b_{2j}+\ldots +a_{in}b_{nj}\]

The product of the matrix $A$ and the matrix $B$ is denoted $AB$.

Example 1. Find the product of two matrices $A$ and $B$ if

![]() ,

,

.

.

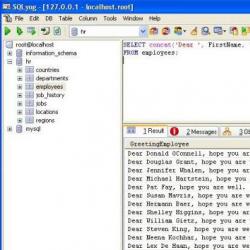

Solution. It is convenient to write out the product of the two matrices $A$ and $B$ as in Fig. 2:

In the diagram, the gray arrows show which row of the matrix $A$ is multiplied by which column of the matrix $B$ to obtain each element of the matrix $C$, and lines in the color of each element of $C$ connect the corresponding elements of the matrices $A$ and $B$ whose products are added to obtain that element.

As a result, we obtain the elements of the product of matrices:

Now we have everything to write down the product of two matrices:

![]() .


The product of two matrices $AB$ makes sense only when the number of columns of the matrix $A$ matches the number of rows of the matrix $B$.

This important feature will be easier to remember if you use the following reminders more often:

There is another important feature of the product of matrices with respect to the number of rows and columns:

In the product of matrices $AB$, the number of rows equals the number of rows of the matrix $A$, and the number of columns equals the number of columns of the matrix $B$.

Example 2. Find the number of rows and columns of the matrix $C$ that is the product of two matrices $A$ and $B$ of the following dimensions:

a) 2 × 10 and 10 × 5;

b) 10 × 2 and 2 × 5.
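The two rules about dimensions (the product exists only when the inner sizes match, and the result takes the rows of the first factor and the columns of the second) can be sketched in a few lines of Python. The function name `product_shape` is a hypothetical helper introduced here for illustration.

```python
def product_shape(shape_a, shape_b):
    """Return (rows, columns) of A*B, or None if the product is undefined.

    Each shape is a (rows, columns) tuple.
    """
    rows_a, cols_a = shape_a
    rows_b, cols_b = shape_b
    if cols_a != rows_b:          # inner dimensions must coincide
        return None
    return (rows_a, cols_b)       # outer dimensions survive


print(product_shape((2, 10), (10, 5)))  # (2, 5)
print(product_shape((10, 2), (2, 5)))   # (10, 5)
print(product_shape((2, 5), (3, 2)))    # None: 5 columns vs 3 rows
```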

Example 3 Find product of matrices A and B, if:

.

.

Solution. The number of rows in matrix $A$ is 2 and the number of columns in matrix $B$ is 2. Therefore, the dimension of the matrix $C=AB$ is 2 × 2.

Calculate matrix elements C = AB.

Found product of matrices: .


Example 5 Find product of matrices A and B, if:

.

.

Solution. The number of rows in matrix $A$ is 2 and the number of columns in matrix $B$ is 1. Therefore, the dimension of the matrix $C=AB$ is 2 × 1.

Calculate matrix elements C = AB.

![]()

The product of matrices will be written as a column matrix: .


Example 6 Find product of matrices A and B, if:

.

.

Solution. The number of rows in matrix $A$ is 3 and the number of columns in matrix $B$ is 3. Therefore, the dimension of the matrix $C=AB$ is 3 × 3.

Calculate matrix elements C = AB.

Found product of matrices:  .


Example 7 Find product of matrices A and B, if:

.

.

Solution. The number of rows in matrix $A$ is 1 and the number of columns in matrix $B$ is 1. Consequently, the dimension of the matrix $C=AB$ is 1 × 1.

Calculate the element of the matrix C = AB.

The product of matrices is a matrix of one element: .


Matrix exponentiation

Raising a matrix to a power is defined as multiplying the matrix by itself. Since the product of matrices exists only when the number of columns of the first matrix equals the number of rows of the second, only square matrices can be raised to a power. The $n$-th power of a matrix is obtained by multiplying the matrix by itself $n$ times:

\[A^{n}=\underbrace{A\cdot A\cdot \ldots \cdot A}_{n\ \text{times}}\]
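Raising a matrix to a power by repeated multiplication can be sketched in Python as follows. This is a minimal illustration, not an optimized implementation: `matmul` and `matrix_power` are hypothetical helper names, and the matrix `A` is a made-up example rather than the one from the exercises.

```python
def matmul(a, b):
    """Row-by-column product of two matrices given as nested lists."""
    if len(a[0]) != len(b):
        raise ValueError("columns of the first matrix must equal rows of the second")
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def matrix_power(a, n):
    """Multiply the square matrix a by itself so that it appears n times (n >= 1)."""
    if any(len(row) != len(a) for row in a):
        raise ValueError("only square matrices can be raised to a power")
    if n < 1:
        raise ValueError("this sketch handles only n >= 1")
    result = a
    for _ in range(n - 1):
        result = matmul(result, a)
    return result


A = [[1, 1], [0, 1]]          # illustrative matrix (an assumption)
print(matrix_power(A, 2))     # [[1, 2], [0, 1]]
print(matrix_power(A, 3))     # [[1, 3], [0, 1]]
```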

Example 8 Given a matrix. Find A² and A³ .

Find the product of the matrices yourself, and then see the solution

Example 9 Given a matrix ![]()

Find the product of the given matrix and the transposed matrix, the product of the transposed matrix and the given matrix.

Properties of the product of two matrices

Property 1. The product of any matrix $A$ and the identity matrix $E$ of the corresponding order, both on the right and on the left, coincides with the matrix $A$, i.e. $AE=EA=A$.

In other words, the role of the identity matrix in matrix multiplication is the same as the role of the number one in the multiplication of numbers.

Example 10 Make sure property 1 is true by finding the products of the matrix

![]()

by the identity matrix on the right and on the left.

Solution. Since the matrix $A$ contains three columns, you need to find the product $AE$, where

\[E=\begin{pmatrix}1 & 0 & 0\\ 0 & 1 & 0\\ 0 & 0 & 1\end{pmatrix}\]

is the identity matrix of the third order. Let's find the elements of the product $C=AE$:

It turns out that $AE=A$.

Now let's find the product $EA$, where $E$ is the identity matrix of the second order, since the matrix $A$ contains two rows. Let's find the elements of the product $C=EA$:

![]()

The product of matrices (C=AB) is an operation only for consistent matrices A and B, in which the number of columns of matrix A is equal to the number of rows of matrix B:

\[\underbrace{C}_{m\times n}=\underbrace{A}_{m\times p}\times \underbrace{B}_{p\times n}\]

Definition 1

Given matrices:

- $A=(a_{ij})$ of dimensions $m\times p$;

- $B=(b_{ij})$ of dimensions $p\times n$.

Their product is the matrix $C$ whose elements $c_{ij}$ are calculated by the following formula:

\[c_{ij}=a_{i1}\times b_{1j}+a_{i2}\times b_{2j}+\ldots +a_{ip}\times b_{pj},\quad i=1,\ldots ,m,\quad j=1,\ldots ,n\]
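The formula for $c_{ij}$ translates almost literally into three nested loops. The sketch below is a plain-Python illustration under the assumption that matrices are stored as lists of rows; the function name `matmul` and the sample matrices are chosen here for the example.

```python
def matmul(a, b):
    """Multiply an m x p matrix a by a p x n matrix b (lists of rows)."""
    m, p = len(a), len(a[0])
    if len(b) != p:
        raise ValueError("matrices are not consistent: columns of a != rows of b")
    n = len(b[0])
    c = [[0] * n for _ in range(m)]
    for i in range(m):            # row index of the result
        for j in range(n):        # column index of the result
            for t in range(p):    # running index of the sum
                c[i][j] += a[i][t] * b[t][j]
    return c


A = [[1, 2, 1],
     [0, 1, 2]]                   # 2 x 3
B = [[1, 0],
     [0, 1],
     [1, 1]]                      # 3 x 2
print(matmul(A, B))               # [[2, 3], [2, 3]]
print(matmul(B, A))               # [[1, 2, 1], [0, 1, 2], [1, 3, 3]]
```

The two results have different sizes (2 × 2 and 3 × 3), so swapping the factors changes the outcome.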

Example 2

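Let's calculate the products $AB$ and $BA$:

\[A=\begin{pmatrix}1 & 2 & 1\\ 0 & 1 & 2\end{pmatrix},\quad B=\begin{pmatrix}1 & 0\\ 0 & 1\\ 1 & 1\end{pmatrix}\]

Solution using the matrix multiplication rule:

\[\underbrace{A}_{2\times 3}\times \underbrace{B}_{3\times 2}=\begin{pmatrix}1 & 2 & 1\\ 0 & 1 & 2\end{pmatrix}\times \begin{pmatrix}1 & 0\\ 0 & 1\\ 1 & 1\end{pmatrix}=\begin{pmatrix}1\times 1+2\times 0+1\times 1 & 1\times 0+2\times 1+1\times 1\\ 0\times 1+1\times 0+2\times 1 & 0\times 0+1\times 1+2\times 1\end{pmatrix}=\underbrace{\begin{pmatrix}2 & 3\\ 2 & 3\end{pmatrix}}_{2\times 2}\]

\[\underbrace{B}_{3\times 2}\times \underbrace{A}_{2\times 3}=\begin{pmatrix}1 & 0\\ 0 & 1\\ 1 & 1\end{pmatrix}\times \begin{pmatrix}1 & 2 & 1\\ 0 & 1 & 2\end{pmatrix}=\begin{pmatrix}1\times 1+0\times 0 & 1\times 2+0\times 1 & 1\times 1+0\times 2\\ 0\times 1+1\times 0 & 0\times 2+1\times 1 & 0\times 1+1\times 2\\ 1\times 1+1\times 0 & 1\times 2+1\times 1 & 1\times 1+1\times 2\end{pmatrix}=\underbrace{\begin{pmatrix}1 & 2 & 1\\ 0 & 1 & 2\\ 1 & 3 & 3\end{pmatrix}}_{3\times 3}\]

The products $AB$ and $BA$ are found, but they are matrices of different sizes: $AB$ is not equal to $BA$.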

Properties of matrix multiplication

Matrix multiplication properties:

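- $(AB)C=A(BC)$: associativity of multiplication;

- $A(B+C)=AB+AC$: distributivity of multiplication;

- $(A+B)C=AC+BC$: distributivity of multiplication;

- $\lambda (AB)=(\lambda A)B$.

Check property No. 1: $(AB)C=A(BC)$:

\[(A\times B)\times C=\left( \begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \begin{pmatrix}5 & 6\\ 7 & 8\end{pmatrix} \right)\times \begin{pmatrix}1 & 0\\ 0 & 2\end{pmatrix}=\begin{pmatrix}19 & 22\\ 43 & 50\end{pmatrix}\times \begin{pmatrix}1 & 0\\ 0 & 2\end{pmatrix}=\begin{pmatrix}19 & 44\\ 43 & 100\end{pmatrix},\]

\[A\times (B\times C)=\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \left( \begin{pmatrix}5 & 6\\ 7 & 8\end{pmatrix}\times \begin{pmatrix}1 & 0\\ 0 & 2\end{pmatrix} \right)=\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \begin{pmatrix}5 & 12\\ 7 & 16\end{pmatrix}=\begin{pmatrix}19 & 44\\ 43 & 100\end{pmatrix}.\]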

Example 2

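We check property No. 2: $A(B+C)=AB+AC$:

\[A\times (B+C)=\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \left( \begin{pmatrix}5 & 6\\ 7 & 8\end{pmatrix}+\begin{pmatrix}1 & 0\\ 0 & 2\end{pmatrix} \right)=\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \begin{pmatrix}6 & 6\\ 7 & 10\end{pmatrix}=\begin{pmatrix}20 & 26\\ 46 & 58\end{pmatrix},\]

\[AB+AC=\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \begin{pmatrix}5 & 6\\ 7 & 8\end{pmatrix}+\begin{pmatrix}1 & 2\\ 3 & 4\end{pmatrix}\times \begin{pmatrix}1 & 0\\ 0 & 2\end{pmatrix}=\begin{pmatrix}19 & 22\\ 43 & 50\end{pmatrix}+\begin{pmatrix}1 & 4\\ 3 & 8\end{pmatrix}=\begin{pmatrix}20 & 26\\ 46 & 58\end{pmatrix}.\]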
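The two checks above, for the matrices A = (1 2; 3 4), B = (5 6; 7 8) and C = (1 0; 0 2), can be repeated mechanically. The following Python sketch assumes matrices stored as lists of rows; `matmul` and `matadd` are hypothetical helper names.

```python
def matmul(a, b):
    """Row-by-column product of two consistent matrices."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def matadd(a, b):
    """Element-wise sum of two matrices of the same size."""
    return [[x + y for x, y in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[1, 0], [0, 2]]

# Associativity: (AB)C = A(BC)
print(matmul(matmul(A, B), C))          # [[19, 44], [43, 100]]
print(matmul(A, matmul(B, C)))          # [[19, 44], [43, 100]]

# Distributivity: A(B + C) = AB + AC
print(matmul(A, matadd(B, C)))                  # [[20, 26], [46, 58]]
print(matadd(matmul(A, B), matmul(A, C)))       # [[20, 26], [46, 58]]
```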

Product of three matrices

The product of three matrices A B C is calculated in 2 ways:

- find A B and multiply by C: (A B) C;

- or find first B C, and then multiply A (B C) .

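Multiply the matrices in 2 ways:

\[\begin{pmatrix}4 & 3\\ 7 & 5\end{pmatrix}\times \begin{pmatrix}-28 & 93\\ 38 & -126\end{pmatrix}\times \begin{pmatrix}7 & 3\\ 2 & 1\end{pmatrix}\]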

Action algorithm:

- find the product of 2 matrices;

- then again find the product of 2 matrices.

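1). \[AB=\begin{pmatrix}4 & 3\\ 7 & 5\end{pmatrix}\times \begin{pmatrix}-28 & 93\\ 38 & -126\end{pmatrix}=\begin{pmatrix}4\times (-28)+3\times 38 & 4\times 93+3\times (-126)\\ 7\times (-28)+5\times 38 & 7\times 93+5\times (-126)\end{pmatrix}=\begin{pmatrix}2 & -6\\ -6 & 21\end{pmatrix}\]

2). \[ABC=(AB)C=\begin{pmatrix}2 & -6\\ -6 & 21\end{pmatrix}\begin{pmatrix}7 & 3\\ 2 & 1\end{pmatrix}=\begin{pmatrix}2\times 7-6\times 2 & 2\times 3-6\times 1\\ -6\times 7+21\times 2 & -6\times 3+21\times 1\end{pmatrix}=\begin{pmatrix}2 & 0\\ 0 & 3\end{pmatrix}\]

Now we use the formula $ABC=A(BC)$:

1). \[BC=\begin{pmatrix}-28 & 93\\ 38 & -126\end{pmatrix}\begin{pmatrix}7 & 3\\ 2 & 1\end{pmatrix}=\begin{pmatrix}-28\times 7+93\times 2 & -28\times 3+93\times 1\\ 38\times 7-126\times 2 & 38\times 3-126\times 1\end{pmatrix}=\begin{pmatrix}-10 & 9\\ 14 & -12\end{pmatrix}\]

2). \[ABC=A(BC)=\begin{pmatrix}4 & 3\\ 7 & 5\end{pmatrix}\begin{pmatrix}-10 & 9\\ 14 & -12\end{pmatrix}=\begin{pmatrix}4\times (-10)+3\times 14 & 4\times 9+3\times (-12)\\ 7\times (-10)+5\times 14 & 7\times 9+5\times (-12)\end{pmatrix}=\begin{pmatrix}2 & 0\\ 0 & 3\end{pmatrix}\]

Answer: \[\begin{pmatrix}4 & 3\\ 7 & 5\end{pmatrix}\begin{pmatrix}-28 & 93\\ 38 & -126\end{pmatrix}\begin{pmatrix}7 & 3\\ 2 & 1\end{pmatrix}=\begin{pmatrix}2 & 0\\ 0 & 3\end{pmatrix}\]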

Multiplying a Matrix by a Number

Definition 2

The product of the matrix $A$ by the number $k$ is the matrix $B=Ak$ of the same size, obtained from the original by multiplying all its elements by the given number:

\[b_{i,j}=k\times a_{i,j}\]

Properties of multiplying a matrix by a number:

- 1 × A = A

- 0 × A = zero matrix

- k(A + B) = kA + kB

- (k + n) A = k A + n A

- (k×n)×A = k(n×A)

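Find the product of the matrix $A=\begin{pmatrix}4 & 2\\ 9 & 0\end{pmatrix}$ by 5.

\[5A=5\begin{pmatrix}4 & 2\\ 9 & 0\end{pmatrix}=\begin{pmatrix}5\times 4 & 5\times 2\\ 5\times 9 & 5\times 0\end{pmatrix}=\begin{pmatrix}20 & 10\\ 45 & 0\end{pmatrix}\]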
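Multiplication by a number is the simplest operation to put into code: every element is multiplied by the same factor. A minimal Python sketch, with `scalar_multiply` as a hypothetical helper name:

```python
def scalar_multiply(k, a):
    """Multiply every element of the matrix a (list of rows) by the number k."""
    return [[k * element for element in row] for row in a]


A = [[4, 2], [9, 0]]
print(scalar_multiply(5, A))   # [[20, 10], [45, 0]]
print(scalar_multiply(1, A))   # [[4, 2], [9, 0]]   (1 x A = A)
print(scalar_multiply(0, A))   # [[0, 0], [0, 0]]   (0 x A is the zero matrix)
```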

Multiplication of a matrix by a vector

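Definition 3

To find the product of a matrix and a vector, multiply according to the row-by-column rule:

- if you multiply a matrix by a column vector, the number of columns in the matrix must match the number of rows in the column vector;

- the result of multiplying a matrix by a column vector is always a column vector:

\[AB=\begin{pmatrix}a_{11} & a_{12} & \cdots & a_{1n}\\ a_{21} & a_{22} & \cdots & a_{2n}\\ \cdots & \cdots & \cdots & \cdots \\ a_{m1} & a_{m2} & \cdots & a_{mn}\end{pmatrix}\begin{pmatrix}b_{1}\\ b_{2}\\ \cdots \\ b_{n}\end{pmatrix}=\begin{pmatrix}a_{11}\times b_{1}+a_{12}\times b_{2}+\cdots +a_{1n}\times b_{n}\\ a_{21}\times b_{1}+a_{22}\times b_{2}+\cdots +a_{2n}\times b_{n}\\ \cdots \\ a_{m1}\times b_{1}+a_{m2}\times b_{2}+\cdots +a_{mn}\times b_{n}\end{pmatrix}=\begin{pmatrix}c_{1}\\ c_{2}\\ \cdots \\ c_{m}\end{pmatrix}\]

- if you multiply a matrix by a row vector, then the matrix being multiplied must itself be a column vector, so that its single column matches the single row of the row vector:

\[AB=\begin{pmatrix}a_{1}\\ a_{2}\\ \cdots \\ a_{n}\end{pmatrix}\begin{pmatrix}b_{1} & b_{2} & \cdots & b_{n}\end{pmatrix}=\begin{pmatrix}a_{1}\times b_{1} & a_{1}\times b_{2} & \cdots & a_{1}\times b_{n}\\ a_{2}\times b_{1} & a_{2}\times b_{2} & \cdots & a_{2}\times b_{n}\\ \cdots & \cdots & \cdots & \cdots \\ a_{n}\times b_{1} & a_{n}\times b_{2} & \cdots & a_{n}\times b_{n}\end{pmatrix}=\begin{pmatrix}c_{11} & c_{12} & \cdots & c_{1n}\\ c_{21} & c_{22} & \cdots & c_{2n}\\ \cdots & \cdots & \cdots & \cdots \\ c_{n1} & c_{n2} & \cdots & c_{nn}\end{pmatrix}\]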

Example 5

Find the product of matrix A and column vector B:

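\[AB=\begin{pmatrix}2 & 4 & 0\\ -2 & 1 & 3\\ -1 & 0 & 1\end{pmatrix}\begin{pmatrix}1\\ 2\\ -1\end{pmatrix}=\begin{pmatrix}2\times 1+4\times 2+0\times (-1)\\ -2\times 1+1\times 2+3\times (-1)\\ -1\times 1+0\times 2+1\times (-1)\end{pmatrix}=\begin{pmatrix}2+8+0\\ -2+2-3\\ -1+0-1\end{pmatrix}=\begin{pmatrix}10\\ -3\\ -2\end{pmatrix}\]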
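The product of a matrix and a column vector from Example 5 can be reproduced with a short Python sketch. It assumes the column vector is stored as a flat list; `matvec` is a hypothetical helper name.

```python
def matvec(a, v):
    """Multiply the matrix a (list of rows) by the column vector v (flat list)."""
    if any(len(row) != len(v) for row in a):
        raise ValueError("number of columns must equal the length of the vector")
    # Each entry of the result is the scalar product of one row with v.
    return [sum(x * y for x, y in zip(row, v)) for row in a]


A = [[2, 4, 0],
     [-2, 1, 3],
     [-1, 0, 1]]
B = [1, 2, -1]
print(matvec(A, B))   # [10, -3, -2]
```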

Example 6

Find the product of matrix A and row vector B:

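\[A=\begin{pmatrix}3\\ 2\\ 0\\ 1\end{pmatrix},\quad B=\begin{pmatrix}-1 & 1 & 0 & 2\end{pmatrix}\]

\[AB=\begin{pmatrix}3\\ 2\\ 0\\ 1\end{pmatrix}\times \begin{pmatrix}-1 & 1 & 0 & 2\end{pmatrix}=\begin{pmatrix}3\times (-1) & 3\times 1 & 3\times 0 & 3\times 2\\ 2\times (-1) & 2\times 1 & 2\times 0 & 2\times 2\\ 0\times (-1) & 0\times 1 & 0\times 0 & 0\times 2\\ 1\times (-1) & 1\times 1 & 1\times 0 & 1\times 2\end{pmatrix}=\begin{pmatrix}-3 & 3 & 0 & 6\\ -2 & 2 & 0 & 4\\ 0 & 0 & 0 & 0\\ -1 & 1 & 0 & 2\end{pmatrix}\]

Answer: \[AB=\begin{pmatrix}-3 & 3 & 0 & 6\\ -2 & 2 & 0 & 4\\ 0 & 0 & 0 & 0\\ -1 & 1 & 0 & 2\end{pmatrix}\]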


So, in the previous lesson we went through the rules for adding and subtracting matrices. These operations are so simple that most students grasp them right off the bat.

However, don't celebrate too soon. The freebies are over, so let's move on to multiplication. I'll warn you right away: multiplying two matrices is not at all multiplying the numbers in cells with the same coordinates, as you might think. Everything is much more fun here. And we have to start with some preliminary definitions.

Consistent matrices

One of the most important characteristics of a matrix is its size. We have already talked about this a hundred times: $A=\left[ m\times n \right]$ means that the matrix has exactly $m$ rows and $n$ columns. We have already discussed how not to confuse rows with columns. Now something else is important.

Definition. Matrices of the form $A=\left[ m\times n \right]$ and $B=\left[ n\times k \right]$, in which the number of columns in the first matrix is the same as the number of rows in the second, are called consistent.

Once again: the number of columns in the first matrix is equal to the number of rows in the second! From this we get two conclusions at once:

- We care about the order of the matrices. For example, the matrices $A=\left[ 3\times 2 \right]$ and $B=\left[ 2\times 5 \right]$ are consistent (2 columns in the first matrix and 2 rows in the second), but the other way round, the matrices $B=\left[ 2\times 5 \right]$ and $A=\left[ 3\times 2 \right]$ are no longer consistent (5 columns in the first matrix are not quite 3 rows in the second).

- Consistency is easy to check if you write out all the dimensions one after another. Using the example from the previous paragraph: "3 2 2 5" has the same numbers in the middle, so the matrices are consistent. But "2 5 3 2" is not consistent, because the numbers in the middle are different.

Besides, Captain Obvious hints that square matrices of the same size $\left[ n\times n \right]$ are always consistent.

In mathematics, when the order in which objects are listed matters (for example, in the definition discussed above, the order of the matrices is important), one often speaks of ordered pairs. We met them back in school: I think it's a no-brainer that the coordinates $\left( 1;0 \right)$ and $\left( 0;1 \right)$ define different points in the plane.

So: coordinates are also ordered pairs, which are made up of numbers. But nothing prevents you from making such a pair of matrices. Then it will be possible to say: "An ordered pair of matrices $\left(A;B \right)$ is consistent if the number of columns in the first matrix is the same as the number of rows in the second."

Well, so what?

Definition of multiplication

Consider two consistent matrices: $A=\left[ m\times n \right]$ and $B=\left[ n\times k \right]$. And we define for them the operation of multiplication.

Definition. The product of two consistent matrices $A=\left[ m\times n \right]$ and $B=\left[ n\times k \right]$ is the new matrix $C=\left[ m\times k \right] $, the elements of which are calculated according to the formula:

\[\begin{align} & {{c}_{i;j}}={{a}_{i;1}}\cdot {{b}_{1;j}}+{{a}_{i;2}}\cdot {{b}_{2;j}}+\ldots +{{a}_{i;n}}\cdot {{b}_{n;j}}= \\ & =\sum\limits_{t=1}^{n}{{{a}_{i;t}}\cdot {{b}_{t;j}}} \end{align}\]

Such a product is denoted in the standard way: $C=A\cdot B$.

For those who see this definition for the first time, two questions immediately arise:

- What kind of nonsense is this?

- Why is it so difficult?

Well, first things first. Let's start with the first question. What do all these indexes mean? And how not to make mistakes when working with real matrices?

First of all, note that the long line for calculating ${{c}_{i;j}}$ (I deliberately put a semicolon between the indices to avoid confusion, but in general you don't need it; I got tired of typing the formula in the definition myself) really boils down to a simple rule:

- Take the $i$-th row in the first matrix;

- Take the $j$-th column in the second matrix;

- We get two sequences of numbers. We multiply the elements of these sequences with the same numbers, and then add the resulting products.

This process is easy to understand from the picture:

Scheme for multiplying two matrices

Once again: we fix the row $i$ in the first matrix and the column $j$ in the second matrix, multiply the elements with the same numbers, and then add the resulting products to get ${{c}_{ij}}$. And so on for all $1\le i\le m$ and $1\le j\le k$. That is, there will be $m\times k$ such "contortions" in total.

In fact, we have already met matrix multiplication in the school curriculum, only in a much reduced form. Let vectors be given:

\[\begin{align} & \vec{a}=\left( {{x}_{a}};{{y}_{a}};{{z}_{a}} \right); \\ & \overrightarrow{b}=\left( {{x}_{b}};{{y}_{b}};{{z}_{b}} \right). \\ \end{align}\]

Then their scalar product will be exactly the sum of pairwise products:

\[\overrightarrow{a}\cdot \overrightarrow{b}={{x}_{a}}\cdot {{x}_{b}}+{{y}_{a}}\cdot {{y}_{b}}+{{z}_{a}}\cdot {{z}_{b}}\]

In fact, in those distant years, when the trees were greener and the sky brighter, we simply multiplied the row vector $\overrightarrow{a}$ by the column vector $\overrightarrow{b}$.

Nothing has changed today. It's just that now there are more of these row and column vectors.

But enough theory! Let's look at real examples. And let's start with the simplest case: square matrices.

Multiplication of square matrices

Task 1. Perform the multiplication:

\[\left[ \begin{array}{*{35}{r}} 1 & 2 \\ -3 & 4 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} -2 & 4 \\ 3 & 1 \\\end{array} \right]\]

Solution. So, we have two matrices: $A=\left[ 2\times 2 \right]$ and $B=\left[ 2\times 2 \right]$. It is clear that they are consistent (square matrices of the same size are always consistent). So we do the multiplication:

\[\begin{align} & \left[ \begin{array}{*{35}{r}} 1 & 2 \\ -3 & 4 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} -2 & 4 \\ 3 & 1 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 1\cdot \left( -2 \right)+2\cdot 3 & 1\cdot 4+2\cdot 1 \\ -3\cdot \left( -2 \right)+4\cdot 3 & -3\cdot 4+4\cdot 1 \\\end{array} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 4 & 6 \\ 18 & -8 \\\end{array} \right]. \end{align}\]

That's all!

Answer: $\left[ \begin{array}{*{35}{r}} 4 & 6 \\ 18 & -8 \\\end{array} \right]$.

Task 2. Perform the multiplication:

\[\left[ \begin{matrix} 1 & 3 \\ 2 & 6 \\\end{matrix} \right]\cdot \left[ \begin{array}{*{35}{r}} 9 & 6 \\ -3 & -2 \\\end{array} \right]\]

Solution. Again, the matrices are consistent, so we perform the following actions:

\[\begin{align} & \left[ \begin{matrix} 1 & 3 \\ 2 & 6 \\\end{matrix} \right]\cdot \left[ \begin{array}{*{35}{r}} 9 & 6 \\ -3 & -2 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 1\cdot 9+3\cdot \left( -3 \right) & 1\cdot 6+3\cdot \left( -2 \right) \\ 2\cdot 9+6\cdot \left( -3 \right) & 2\cdot 6+6\cdot \left( -2 \right) \\\end{array} \right]= \\ & =\left[ \begin{matrix} 0 & 0 \\ 0 & 0 \\\end{matrix} \right]. \end{align}\]

As you can see, the result is a matrix filled with zeros.

Answer: $\left[ \begin{matrix} 0 & 0 \\ 0 & 0 \\\end{matrix} \right]$.

From the above examples, it is obvious that matrix multiplication is not such a complicated operation. At least for 2 by 2 square matrices.

In the process of calculation, we wrote out an intermediate matrix showing exactly which numbers go into each cell. This is exactly what should be done when solving real problems.

Basic properties of the matrix product

In a nutshell. Matrix multiplication:

- Non-commutative: $A\cdot B\ne B\cdot A$ in general. There are, of course, special matrices for which the equality $A\cdot B=B\cdot A$ holds (for example, if $B=E$ is the identity matrix), but in the vast majority of cases this does not work;

- Associative: $\left(A\cdot B \right)\cdot C=A\cdot \left(B\cdot C \right)$. There are no options here: adjacent matrices can be multiplied without worrying about what is to the left and to the right of these two matrices.

- Distributive: $A\cdot \left( B+C \right)=A\cdot B+A\cdot C$ and $\left( A+B \right)\cdot C=A\cdot C+B\cdot C$.

And now - all the same, but in more detail.

Matrix multiplication is a lot like classical number multiplication. But there are differences, the most important of which is that matrix multiplication is, generally speaking, non-commutative.

Consider again the matrices from Task 1. We already know their product in the original order:

\[\left[ \begin{array}{*{35}{r}} 1 & 2 \\ -3 & 4 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} -2 & 4 \\ 3 & 1 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 4 & 6 \\ 18 & -8 \\\end{array} \right]\]

But if we swap the matrices, we get a completely different result:

\[\left[ \begin{array}{*{35}{r}} -2 & 4 \\ 3 & 1 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 1 & 2 \\ -3 & 4 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} -14 & 12 \\ 0 & 10 \\\end{array} \right]\]

It turns out that $A\cdot B\ne B\cdot A$. Also, the multiplication operation is only defined for consistent matrices $A=\left[ m\times n \right]$ and $B=\left[ n\times k \right]$, but no one guaranteed that they would remain consistent if they are swapped. For example, the matrices $\left[ 2\times 3 \right]$ and $\left[ 3\times 5 \right]$ are quite consistent in this order, but the same matrices $\left[ 3\times 5 \right]$ and $\left[ 2\times 3 \right]$ written in reverse order are no longer consistent. Sadness :(
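Non-commutativity is easy to confirm by computing both products for the matrices from Task 1. The Python sketch below is an illustration only; `matmul` is a hypothetical helper that applies the row-by-column rule to lists of rows.

```python
def matmul(a, b):
    """Row-by-column product; raises ValueError for inconsistent matrices."""
    if len(a[0]) != len(b):
        raise ValueError("matrices are not consistent in this order")
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


A = [[1, 2], [-3, 4]]
B = [[-2, 4], [3, 1]]

AB = matmul(A, B)
BA = matmul(B, A)
print(AB)          # [[4, 6], [18, -8]]
print(BA)          # [[-14, 12], [0, 10]]
print(AB == BA)    # False
```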

Among square matrices of a given size $n$, there will always be those that give the same result both when multiplied in direct and in reverse order. How to describe all such matrices (and how many of them in general) is a topic for a separate lesson. Today we will not talk about it. :)

However, matrix multiplication is associative:

\[\left(A\cdot B \right)\cdot C=A\cdot \left(B\cdot C \right)\]

Therefore, when you need to multiply several matrices in a row, it is not at all necessary to plow straight through from left to right: it is quite possible that some adjacent matrices, when multiplied, give an interesting result. For example, a zero matrix, as in Task 2 discussed above.

In real problems, most often one has to multiply square matrices of size $\left[ n\times n \right]$. The set of all such matrices is denoted by ${{M}^{n}}$ (i.e., the notations $A=\left[ n\times n \right]$ and $A\in {{M}^{n}}$ mean the same thing), and it will definitely contain the matrix $E$, which is called the identity matrix.

Definition. The identity matrix of size $n$ is a matrix $E$ such that for any square matrix $A=\left[ n\times n \right]$ the equality holds:

\[A\cdot E=E\cdot A=A\]

Such a matrix always looks the same: there are ones on its main diagonal, and zeros in all other cells.

Finally, matrix multiplication is distributive with respect to addition:

\[\begin{align} & A\cdot \left( B+C \right)=A\cdot B+A\cdot C; \\ & \left( A+B \right)\cdot C=A\cdot C+B\cdot C. \\ \end{align}\]

In other words, if you need to multiply one matrix by the sum of two others, then you can multiply it by each of these "other two", and then add the results. In practice, you usually have to perform the inverse operation: we notice the same matrix, take it out of the bracket, perform addition, and thereby simplify our life. :)

Note that to describe distributivity, we had to write two formulas: one where the sum is in the second factor and one where the sum is in the first. This is precisely because matrix multiplication is non-commutative (and in general, non-commutative algebra is full of quirks that never even come to mind when working with ordinary numbers). And if, for example, you need to write down this property in an exam, be sure to write both formulas, otherwise the teacher may get a little angry.

Okay, these were all fairy tales about square matrices. What about rectangular ones?

The case of rectangular matrices

Nothing special: everything is the same as with square ones.

Task 3. Perform the multiplication:

\[\left[ \begin{matrix} 5 & 4 \\ 2 & 5 \\ 3 & 1 \\\end{matrix} \right]\cdot \left[ \begin{array}{*{35}{r}} -2 & 5 \\ 3 & 4 \\\end{array} \right]\]

Solution. We have two matrices: $A=\left[ 3\times 2 \right]$ and $B=\left[ 2\times 2 \right]$. Let's write the numbers indicating the sizes in a row:

\[3;\ 2;\ 2;\ 2\]

As you can see, the two central numbers are the same. This means that the matrices are consistent, and they can be multiplied. And at the output we get the matrix $C=\left[ 3\times 2 \right]$:

\[\begin{align} & \left[ \begin{matrix} 5 & 4 \\ 2 & 5 \\ 3 & 1 \\\end{matrix} \right]\cdot \left[ \begin{array}{*{35}{r}} -2 & 5 \\ 3 & 4 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 5\cdot \left( -2 \right)+4\cdot 3 & 5\cdot 5+4\cdot 4 \\ 2\cdot \left( -2 \right)+5\cdot 3 & 2\cdot 5+5\cdot 4 \\ 3\cdot \left( -2 \right)+1\cdot 3 & 3\cdot 5+1\cdot 4 \\\end{array} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 2 & 41 \\ 11 & 30 \\ -3 & 19 \\\end{array} \right]. \end{align}\]

Everything is clear: the final matrix has 3 rows and 2 columns, exactly $\left[ 3\times 2 \right]$.

Answer: $\left[ \begin{array}{*{35}{r}} 2 & 41 \\ 11 & 30 \\ -3 & 19 \\\end{array} \right]$.

Now consider one of the best training tasks for those who are just starting to work with matrices. In it, you need not just to multiply two tables of numbers, but first to determine: is such a multiplication permissible at all?

Problem 4. Find all possible pairwise products of matrices:

\\]; $B=\left[ \begin(matrix) \begin(matrix) 0 \\ 2 \\ 0 \\ 4 \\\end(matrix) & \begin(matrix) 1 \\ 0 \\ 3 \\ 0 \ \\end(matrix) \\\end(matrix) \right]$; $C=\left[ \begin(matrix)0 & 1 \\ 1 & 0 \\\end(matrix) \right]$.

Decision. First, let's write down the dimensions of the matrices:

\;\ B=\left[ 4\times 2 \right];\ C=\left[ 2\times 2 \right]\]

We get that the matrix $A$ can be matched only with the matrix $B$, since the number of columns in $A$ is 4, and only $B$ has this number of rows. Therefore, we can find the product:

\\cdot \left[ \begin(array)(*(35)(r)) 0 & 1 \\ 2 & 0 \\ 0 & 3 \\ 4 & 0 \\\end(array) \right]=\ left[ \begin(array)(*(35)(r))-10 & 7 \\ 10 & 7 \\\end(array) \right]\]

I suggest that the reader perform the intermediate steps on their own. I will only note that it is better to determine the size of the resulting matrix in advance, even before any calculations:

\\cdot \left[ 4\times 2 \right]=\left[ 2\times 2 \right]\]

In other words, we simply remove the "transitional" coefficients that ensured the consistency of the matrices.

What other options are possible? It is certainly possible to find $B\cdot A$, since $B=\left[ 4\times 2 \right]$, $A=\left[ 2\times 4 \right]$, so the ordered pair $\left(B ;A \right)$ is consistent, and the dimension of the product will be:

\[\left[ 4\times 2 \right]\cdot \left[ 2\times 4 \right]=\left[ 4\times 4 \right]\]

In short, the output will be a matrix $\left[ 4\times 4 \right]$, whose coefficients are easy to calculate:

\[\left[ \begin{array}{*{35}{r}} 0 & 1 \\ 2 & 0 \\ 0 & 3 \\ 4 & 0 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 1 & -1 & 2 & -2 \\ 1 & 1 & 2 & 2 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 1 & 1 & 2 & 2 \\ 2 & -2 & 4 & -4 \\ 3 & 3 & 6 & 6 \\ 4 & -4 & 8 & -8 \\\end{array} \right]\]

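Obviously, the products $C\cdot A$ and $B\cdot C$ are also defined, and that is all. So we simply write out the resulting products:

\[C\cdot A=\left[ \begin{array}{*{35}{r}} 1 & 1 & 2 & 2 \\ 1 & -1 & 2 & -2 \\\end{array} \right];\quad B\cdot C=\left[ \begin{array}{*{35}{r}} 1 & 0 \\ 0 & 2 \\ 3 & 0 \\ 0 & 4 \\\end{array} \right]\]

That was easy. :)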

Answer: $AB=\left[ \begin{array}{*{35}{r}} -10 & 7 \\ 10 & 7 \\\end{array} \right]$; $BA=\left[ \begin{array}{*{35}{r}} 1 & 1 & 2 & 2 \\ 2 & -2 & 4 & -4 \\ 3 & 3 & 6 & 6 \\ 4 & -4 & 8 & -8 \\\end{array} \right]$; $CA=\left[ \begin{array}{*{35}{r}} 1 & 1 & 2 & 2 \\ 1 & -1 & 2 & -2 \\\end{array} \right]$; $BC=\left[ \begin{array}{*{35}{r}} 1 & 0 \\ 0 & 2 \\ 3 & 0 \\ 0 & 4 \\\end{array} \right]$.
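The consistency check from this problem is easy to automate. The sketch below goes through every ordered pair of distinct matrices, compares the number of columns of the first factor with the number of rows of the second, and multiplies only the consistent pairs. The helper names `shape` and `mat_mul` are illustrative assumptions, and $A$ is taken as the $\left[ 2\times 4 \right]$ matrix that appears as the second factor in the product $B\cdot A$.

```python
from itertools import permutations


def shape(m):
    """Return (rows, columns) of a matrix stored as a list of rows."""
    return (len(m), len(m[0]))


def mat_mul(a, b):
    """Product of two consistent matrices, element by element from the definition."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


matrices = {
    "A": [[1, -1, 2, -2], [1, 1, 2, 2]],     # 2 x 4
    "B": [[0, 1], [2, 0], [0, 3], [4, 0]],   # 4 x 2
    "C": [[0, 1], [1, 0]],                   # 2 x 2
}

products = {}
for x, y in permutations(matrices, 2):       # ordered pairs of distinct matrices
    # consistent only if columns of the first equal rows of the second
    if shape(matrices[x])[1] == shape(matrices[y])[0]:
        products[x + y] = mat_mul(matrices[x], matrices[y])

for name, value in products.items():
    print(name, shape(value), value)
```

Only the four products from the answer (`AB`, `BA`, `BC`, `CA`) survive the check; `AC` and `CB` are rejected because their inner dimensions differ.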

In general, I highly recommend working through this problem yourself, along with the similar one in the homework. This seemingly simple reasoning will help you practice all the key steps of matrix multiplication.

But the story doesn't end there. Let's move on to special cases of multiplication. :)

Row vectors and column vectors

One of the most common matrix operations is multiplication by a matrix that has one row or one column.

Definition. A column vector is a $\left[ m\times 1 \right]$ matrix, i.e. consisting of several rows and only one column.

A row vector is a matrix of size $\left[ 1\times n \right]$, i.e. consisting of one row and several columns.

In fact, we have already met these objects. For example, an ordinary three-dimensional vector from solid geometry, $\overrightarrow{a}=\left( x;y;z \right)$, is nothing but a row vector. From a theoretical point of view, there is almost no difference between rows and columns. You need to be careful only when checking consistency with the neighboring matrix factors.

Problem 5. Multiply:

\[\left[ \begin{array}{*{35}{r}} 2 & -1 & 3 \\ 4 & 2 & 0 \\ -1 & 1 & 1 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 1 \\ 2 \\ -1 \\\end{array} \right]\]

Solution. We have a product of consistent matrices: $\left[ 3\times 3 \right]\cdot \left[ 3\times 1 \right]=\left[ 3\times 1 \right]$. Let's compute this product:

\[\left[ \begin{array}{*{35}{r}} 2 & -1 & 3 \\ 4 & 2 & 0 \\ -1 & 1 & 1 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 1 \\ 2 \\ -1 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 2\cdot 1+\left(-1 \right)\cdot 2+3\cdot \left(-1 \right) \\ 4\cdot 1+2\cdot 2+0\cdot \left(-1 \right) \\ -1\cdot 1+1\cdot 2+1\cdot \left(-1 \right) \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} -3 \\ 8 \\ 0 \\\end{array} \right]\]

Answer: $\left[ \begin{array}{*{35}{r}} -3 \\ 8 \\ 0 \\\end{array} \right]$.

Problem 6. Perform the multiplication:

\[\left[ \begin{array}{*{35}{r}} 1 & 2 & -3 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 3 & 1 & -1 \\ 4 & -1 & 3 \\ 2 & 6 & 0 \\\end{array} \right]\]

Solution. Again everything is consistent: $\left[ 1\times 3 \right]\cdot \left[ 3\times 3 \right]=\left[ 1\times 3 \right]$. Let's compute the product:

\[\left[ \begin{array}{*{35}{r}} 1 & 2 & -3 \\\end{array} \right]\cdot \left[ \begin{array}{*{35}{r}} 3 & 1 & -1 \\ 4 & -1 & 3 \\ 2 & 6 & 0 \\\end{array} \right]=\left[ \begin{array}{*{35}{r}} 5 & -19 & 5 \\\end{array} \right]\]

Answer: $\left[ \begin{matrix} 5 & -19 & 5 \\\end{matrix} \right]$.

As you can see, when multiplying a row vector and a column vector by a square matrix, the output is always a row or column of the same size. This fact has many applications, from solving linear equations to all sorts of coordinate transformations (which eventually also come down to systems of equations, but let's not talk about sad things).
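Both cases can be sketched in a few lines of Python. Here vectors are stored as flat lists, and the two hypothetical helpers `mat_vec` and `vec_mat` (names chosen for this illustration only) cover "matrix times column" and "row times matrix" respectively.

```python
def mat_vec(m, v):
    """Matrix times column vector: one dot product per row of the matrix."""
    return [sum(row[t] * v[t] for t in range(len(v))) for row in m]


def vec_mat(v, m):
    """Row vector times matrix: one dot product per column of the matrix."""
    return [sum(v[t] * m[t][j] for t in range(len(v))) for j in range(len(m[0]))]


column = mat_vec([[2, -1, 3], [4, 2, 0], [-1, 1, 1]], [1, 2, -1])
row = vec_mat([1, 2, -3], [[3, 1, -1], [4, -1, 3], [2, 6, 0]])
print(column)  # [-3, 8, 0]
print(row)     # [5, -19, 5]
```

In both calls the result has as many entries as the input vector, which matches the observation about square matrices above.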

I think everything was obvious here. Let's move on to the final part of today's lesson.

Matrix exponentiation

Among all multiplication operations, exponentiation deserves special attention: this is when we multiply the same object by itself several times. Matrices are no exception; they can also be raised to various powers.

For a square matrix such products are always consistent:

\[\left[ n\times n \right]\cdot \left[ n\times n \right]=\left[ n\times n \right]\]

And they are denoted in the same way as ordinary powers:

\[\begin{align} & A\cdot A={{A}^{2}}; \\ & A\cdot A\cdot A={{A}^{3}}; \\ & \underbrace{A\cdot A\cdot \ldots \cdot A}_{n}={{A}^{n}}. \\ \end{align}\]

At first glance, everything is simple. Let's see how it looks in practice:

Problem 7. Raise the matrix to the specified power:

${{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{3}}$

Solution. OK, let's raise it. First we square it:

\[\begin{align} & {{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{2}}=\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 1\cdot 1+1\cdot 0 & 1\cdot 1+1\cdot 1 \\ 0\cdot 1+1\cdot 0 & 0\cdot 1+1\cdot 1 \\\end{array} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 1 & 2 \\ 0 & 1 \\\end{array} \right] \end{align}\]

\[\begin{align} & {{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{3}}={{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{2}}\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 1 & 2 \\ 0 & 1 \\\end{array} \right]\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 1 & 3 \\ 0 & 1 \\\end{array} \right] \end{align}\]

That's all. :)

Answer: $\left[ \begin{matrix} 1 & 3 \\ 0 & 1 \\\end{matrix} \right]$.

Problem 8. Raise the matrix to the specified power:

\[{{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{10}}\]

Solution. Just don't start crying that "the power is too high", "the world is unfair" and "the teachers have completely lost their minds". In fact, everything is easy:

\[\begin{align} & {{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{10}}={{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{3}}\cdot {{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{3}}\cdot {{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{3}}\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]= \\ & =\left( \left[ \begin{matrix} 1 & 3 \\ 0 & 1 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 1 & 3 \\ 0 & 1 \\\end{matrix} \right] \right)\cdot \left( \left[ \begin{matrix} 1 & 3 \\ 0 & 1 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right] \right)= \\ & =\left[ \begin{matrix} 1 & 6 \\ 0 & 1 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 1 & 4 \\ 0 & 1 \\\end{matrix} \right]= \\ & =\left[ \begin{matrix} 1 & 10 \\ 0 & 1 \\\end{matrix} \right] \end{align}\]

Note that in the second line we used the associativity of multiplication. Actually, we used it in the previous problem too, but there it was implicit.

Answer: $\left[ \begin{matrix} 1 & 10 \\ 0 & 1 \\\end{matrix} \right]$.
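Because multiplication is associative, the factors of a power can be grouped in any convenient way. One systematic grouping, not the one used in the solution above, is repeated squaring. The sketch below is a minimal illustration of it; the names `mat_mul` and `mat_pow` are assumptions, and the loop starts from the identity matrix, following the usual convention that the zeroth power of a square matrix is the identity.

```python
def mat_mul(a, b):
    """Product of two consistent matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def mat_pow(a, n):
    """Raise a square matrix to a non-negative integer power by repeated squaring."""
    size = len(a)
    # start from the identity matrix (assumed convention: A^0 is the identity)
    result = [[int(i == j) for j in range(size)] for i in range(size)]
    base = a
    while n > 0:
        if n & 1:                    # this binary digit of n is set: use the current power
            result = mat_mul(result, base)
        base = mat_mul(base, base)   # A, A^2, A^4, A^8, ...
        n >>= 1
    return result


print(mat_pow([[1, 1], [0, 1]], 10))  # [[1, 10], [0, 1]]
```

For the tenth power this multiplies the accumulated result only by $A^2$ and $A^8$, instead of carrying out nine multiplications one after another.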

As you can see, there is nothing complicated about raising a matrix to a power. The last example can be generalized:

\[{{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{n}}=\left[ \begin{array}{*{35}{r}} 1 & n \\ 0 & 1 \\\end{array} \right]\]

This fact is easy to prove by mathematical induction or by direct multiplication. However, such patterns are far from always visible when raising to a power. So be careful: it is often easier and faster to multiply several matrices head-on than to hunt for patterns.
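For reference, the induction step might be sketched as follows. The base case $n=1$ is the matrix itself. Assuming the formula holds for some $n\ge 1$, one more multiplication gives:

\[{{\left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]}^{n+1}}=\left[ \begin{matrix} 1 & n \\ 0 & 1 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 1 & 1 \\ 0 & 1 \\\end{matrix} \right]=\left[ \begin{matrix} 1\cdot 1+n\cdot 0 & 1\cdot 1+n\cdot 1 \\ 0\cdot 1+1\cdot 0 & 0\cdot 1+1\cdot 1 \\\end{matrix} \right]=\left[ \begin{matrix} 1 & n+1 \\ 0 & 1 \\\end{matrix} \right]\]

So the formula also holds for $n+1$, and therefore for every natural $n$.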

In general, do not look for a higher meaning where there is none. To conclude, let's raise a larger matrix to a power: one of size $\left[ 3\times 3 \right]$.

Problem 9. Raise the matrix to the specified power:

\[{{\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]}^{3}}\]

Solution. Let's not look for patterns. We'll just work straight through:

\[{{\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]}^{3}}={{\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]}^{2}}\cdot \left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]\]

Let's start by squaring this matrix:

\[\begin{align} & {{\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]}^{2}}=\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]\cdot \left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \\\end{array} \right] \end{align}\]

Now let's cube it:

\[\begin{align} & {{\left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]}^{3}}=\left[ \begin{array}{*{35}{r}} 2 & 1 & 1 \\ 1 & 2 & 1 \\ 1 & 1 & 2 \\\end{array} \right]\cdot \left[ \begin{matrix} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \\\end{matrix} \right]= \\ & =\left[ \begin{array}{*{35}{r}} 2 & 3 & 3 \\ 3 & 2 & 3 \\ 3 & 3 & 2 \\\end{array} \right] \end{align}\]

That's all. Problem solved.

Answer: $\left[ \begin{matrix} 2 & 3 & 3 \\ 3 & 2 & 3 \\ 3 & 3 & 2 \\\end{matrix} \right]$.

As you can see, the amount of calculations has become larger, but the meaning has not changed at all. :)

That concludes the lesson. Next time we will consider the inverse operation: recovering the original factors from a given product.

As you probably already guessed, we will talk about the inverse matrix and methods for finding it.