Weibull distribution density. Weibull distribution (weakest-link model). MTBF

WEIBULL DISTRIBUTION: a family of probability distributions of a random variable X characterized by the distribution function

$$F(x) = 1 - \exp\left\{-\left(\frac{x-a}{b}\right)^{p}\right\}, \qquad x \ge a, \qquad (*)$$

where p > 0 is the shape parameter of the distribution curve, b > 0 is the scale parameter and a is the shift parameter. The family of distributions (*) is named after W. Weibull, who first used it to approximate experimental data on the tensile strength of steel in fatigue tests and proposed methods for estimating the parameters of (*). The Weibull distribution is one of the asymptotic distributions of the third type for the extreme terms of a variational series. It is widely used to describe the failure patterns of ball bearings, vacuum devices and electronic components. Special cases of the Weibull distribution are the exponential (p = 1) and Rayleigh (p = 2) distributions. The distribution function curves (*) do not belong to the family of Pearson distributions. Auxiliary tables are available for calculating the Weibull distribution function. The quantile of level q equals

$$x_q = a + b\,[-\ln(1-q)]^{1/p},$$

and the mean and variance are

$$\mathsf{E}X = a + b\,\Gamma\!\left(1 + \tfrac{1}{p}\right), \qquad \mathsf{D}X = b^{2}\left[\Gamma\!\left(1 + \tfrac{2}{p}\right) - \Gamma^{2}\!\left(1 + \tfrac{1}{p}\right)\right],$$

where Γ is the gamma function. The skewness and kurtosis (and, for a = 0, the coefficient of variation) do not depend on b, which makes it easier to tabulate them and to build auxiliary tables for obtaining parameter estimates. For p > 1 the Weibull distribution is unimodal with mode $a + b\left(\frac{p-1}{p}\right)^{1/p}$, and the failure rate (hazard) function is non-decreasing. For p < 1 the density and the failure rate are monotonically decreasing. One can construct the so-called Weibull probability paper, on which F(x) becomes a straight line when a = 0; for a > 0 its image is concave, and for a < 0 it is convex. Estimating the parameters of the Weibull distribution by the quantile method leads to much simpler equations than the maximum likelihood method. For a = 0, the joint asymptotic efficiency of the quantile estimates of p and b is maximal (equal to 0.64) when the quantiles of levels 0.24 and 0.93 are used. The distribution function (*) is well approximated by the distribution function of the lognormal distribution, written in terms of Φ, the distribution function of the standard normal distribution.
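The quantile method mentioned above can be sketched in Python. This is a minimal illustration assuming a = 0: since ln(-ln(1 - F)) = p ln x - p ln b is a straight line on Weibull paper, two quantiles determine p and b. The function names are hypothetical, and the two-point fit shows only the basic idea, not necessarily the exact estimator of the cited literature.

```python
import math

def weibull_cdf(x, p, b, a=0.0):
    """Distribution function (*): F(x) = 1 - exp(-((x - a)/b)**p) for x >= a."""
    if x <= a:
        return 0.0
    return 1.0 - math.exp(-((x - a) / b) ** p)

def weibull_quantile(q, p, b, a=0.0):
    """Quantile of level q: x_q = a + b * (-ln(1 - q))**(1/p)."""
    return a + b * (-math.log(1.0 - q)) ** (1.0 / p)

def quantile_method(x1, x2, q1=0.24, q2=0.93):
    """Estimate (p, b) from the quantiles x1, x2 of levels q1, q2, assuming a = 0.

    On Weibull probability paper ln(-ln(1 - F)) = p*ln(x) - p*ln(b), a straight
    line in ln(x), so two points fix both the slope p and the scale b.
    """
    y1 = math.log(-math.log(1.0 - q1))
    y2 = math.log(-math.log(1.0 - q2))
    p = (y2 - y1) / (math.log(x2) - math.log(x1))
    b = x1 / (-math.log(1.0 - q1)) ** (1.0 / p)
    return p, b

# Round trip: the exact 0.24 and 0.93 quantiles of a Weibull law with
# p = 2, b = 100 should give back those parameters.
x24 = weibull_quantile(0.24, p=2.0, b=100.0)
x93 = weibull_quantile(0.93, p=2.0, b=100.0)
p_hat, b_hat = quantile_method(x24, x93)
print(round(p_hat, 6), round(b_hat, 6))  # 2.0 100.0
```

In practice x1 and x2 would be sample quantiles of observed failure times, so p_hat and b_hat would only approximate the true parameters.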

Lit.: Weibull W., A statistical theory of the strength of materials, Stockholm, 1939; Gnedenko B. V., Belyaev Yu. K., Solovyev A. D., Mathematical Methods in Reliability Theory, Moscow, 1965; Johnson L., The Statistical Treatment of Fatigue Experiments, Amsterdam, 1964; Cramér H., Mathematical Methods of Statistics, transl. from English, 2nd ed., Moscow, 1975. Yu. K. Belyaev, E. V. Chepurin.

Mathematical Encyclopedia, ed. I. M. Vinogradov. Moscow: Soviet Encyclopedia, 1977-1985.


Weibull distribution

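The two-parameter Weibull distribution is more flexible than the exponential distribution, which can be viewed as a special case of it. The Weibull density is

$$f(t) = \frac{m}{t_0}\, t^{m-1} \exp\left(-\frac{t^{m}}{t_0}\right). \qquad (8)$$

With 1/t0 = λ and m = 1, Eq. (8) turns into the exponential distribution density. The value 1/t0 determines the scale, and m the asymmetry (shape) of the distribution.

Integrating (8) from 0 to t, we obtain the distribution function F(t), equal to the probability of failure Q(t):

$$F(t) = Q(t) = 1 - \exp\left(-\frac{t^{m}}{t_0}\right). \qquad (9)$$

Consequently, the probability of failure-free operation is

$$P(t) = 1 - Q(t) = \exp\left(-\frac{t^{m}}{t_0}\right). \qquad (10)$$

The ratio of density (8) to probability (10) gives the failure rate

$$\lambda(t) = \frac{f(t)}{P(t)} = \frac{m}{t_0}\, t^{m-1}. \qquad (11)$$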

The main plots of the Weibull distribution are shown in Fig. 3.4.

The two-parameter Weibull distribution is exceptionally flexible in approximating empirical distributions and is therefore widely used in practical applications of reliability theory. It is used to describe reliability both in the run-in period and in the analysis of aging and wear processes.

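The mean time between failures for the Weibull distribution is determined from the condition $T_0 = \int_0^{\infty} P(t)\,dt$ and is equal to

$$T_0 = t_0^{1/m}\,\Gamma\!\left(1 + \frac{1}{m}\right),$$

Fig.3.4. Weibull distribution plots

where Γ(x) is the gamma function.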
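As a sanity check of the MTBF formula, the sketch below integrates P(t) from (10) numerically with the trapezoidal rule and compares it with the closed form. The parameter values and function names are illustrative assumptions, and the integral is truncated at a t_max where P(t) is negligible.

```python
import math

def reliability(t, m, t0):
    """Eq. (10): P(t) = exp(-t**m / t0)."""
    return math.exp(-t ** m / t0)

def mtbf_closed_form(m, t0):
    """T0 = t0**(1/m) * Gamma(1 + 1/m)."""
    return t0 ** (1.0 / m) * math.gamma(1.0 + 1.0 / m)

def mtbf_numeric(m, t0, t_max, n=200_000):
    """T0 = integral of P(t) over [0, t_max] by the trapezoidal rule
    (t_max is chosen so that P(t_max) is negligible)."""
    h = t_max / n
    total = 0.5 * (reliability(0.0, m, t0) + reliability(t_max, m, t0))
    for k in range(1, n):
        total += reliability(k * h, m, t0)
    return total * h

# Hypothetical parameters: m = 2, t0 = 1e6 (so the scale t0**(1/m) = 1000 h).
print(round(mtbf_closed_form(2.0, 1e6), 1))  # 886.2
print(round(mtbf_numeric(2.0, 1e6, t_max=10_000.0), 1))
```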

Normal distribution

The two-parameter normal (Gaussian) distribution is extremely widely used in practical problems of reliability theory. Its parameters are the mathematical expectation m of the random variable and the standard deviation σ. The density of the normal distribution is

$$f(t) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left[-\frac{(t-m)^{2}}{2\sigma^{2}}\right].$$

The distribution function F(t) of the normal law (Fig.3.5) is the integral of the density from -∞ to t:

$$F(t) = \int_{-\infty}^{t} f(u)\,du.$$

The random variable t, as in all reliability problems, is the operating time of the object and is therefore defined on the positive semi-axis, whereas the normal law, as already noted, is defined on the entire number axis from -∞ to +∞. For this reason, reliability theory uses a truncated normal law, whose density is obtained by multiplying (3.13) by a constant factor c:

$$f_{\mathrm{tr}}(t) = c\,f(t), \quad a \le t \le b, \qquad c = \frac{1}{F(b) - F(a)},$$

where a and b are the left and right boundaries of the truncated distribution;

F(a), F(b) are the values of the normal distribution function at the left and right truncation boundaries.

The meaning of the constant factor c becomes clear when considering the graph of the normal distribution density shown in Fig.6.

Fig.5.

The area under a distribution density curve must always equal one, that is, in this case $\int_a^b c\,f(t)\,dt = 1$. As Fig. 6 shows, to satisfy this condition the density curve of the truncated normal law has to be raised (and its mean shifted to the right) by multiplying the original normal density by a constant factor. Accordingly, the main parameters change: the mathematical expectation and the standard deviation. Calculations show that for σ/m < 0.5 (the coefficient of variation) the constant factor c of the truncated normal law is close to one. Therefore, in many practical reliability problems the parameters of the normal law of the random time to failure are used directly, and the mathematical expectation is identified with the mean time to failure T0.
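The claim that c is close to one for σ/m < 0.5 can be checked with a short sketch. It assumes truncation at t = 0 only (a = 0, b = ∞) and a mean of 1 in arbitrary units; the helper names are hypothetical, and the normal distribution function is built from `math.erf`.

```python
import math

def normal_cdf(x, m, sigma):
    """Distribution function of the normal law N(m, sigma**2)."""
    return 0.5 * (1.0 + math.erf((x - m) / (sigma * math.sqrt(2.0))))

def truncation_factor(m, sigma, a=0.0, b=math.inf):
    """c = 1 / (F(b) - F(a)) for the normal law truncated to [a, b]."""
    return 1.0 / (normal_cdf(b, m, sigma) - normal_cdf(a, m, sigma))

# Truncation at t = 0 only, mean m = 1, for several coefficients of variation v = sigma / m.
for v in (0.2, 0.5, 1.0):
    print(v, round(truncation_factor(1.0, v), 4))
```

For v = 0.5 the factor is about 1.023, while for v = 1 it grows to about 1.19, which is why the untruncated parameters are acceptable only for small coefficients of variation.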

Fig.6.

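The probability of failure-free operation under a normal distribution is

$$P(t) = \int_{t}^{\infty} f(u)\,du. \qquad (14)$$

The probability of failure is calculated by the formula (valid for σ ≪ m, when the part of the density at t < 0 is negligible)

$$Q(t) = \int_{0}^{t} f(u)\,du \approx 1 - P(t). \qquad (15)$$

The failure rate is the ratio of the density to the probability of failure-free operation:

$$\lambda(t) = \frac{f(t)}{P(t)} = \frac{f(t)}{\int_{t}^{\infty} f(u)\,du}. \qquad (16)$$

The integrals in expressions (14)…(16) cannot be expressed in terms of elementary functions. They are usually represented through the probability integral (Laplace function) of the argument u = (t - m)/σ,

$$\Phi(u) = \frac{1}{\sqrt{2\pi}} \int_{0}^{u} e^{-x^{2}/2}\,dx, \qquad (17)$$

for which tables have been compiled.

Taking (17) into account, the probability of failure-free operation under the normal law is

$$P(t) = 0.5 - \Phi\left(\frac{t - T_0}{\sigma}\right).$$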
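Instead of the printed tables, the Laplace function (17) can be computed from the error function, since Φ(u) = ½ erf(u/√2). The sketch below uses this to evaluate P(t) and λ(t) for a hypothetical element with T0 = 10 000 h and σ = 2 000 h; the names and values are assumptions for illustration.

```python
import math

def laplace_function(u):
    """Probability integral (17): (1/sqrt(2*pi)) * integral of exp(-x**2/2) from 0 to u."""
    return 0.5 * math.erf(u / math.sqrt(2.0))

def normal_reliability(t, T0, sigma):
    """P(t) = 0.5 - Phi((t - T0) / sigma) for the (untruncated) normal law."""
    return 0.5 - laplace_function((t - T0) / sigma)

def normal_failure_rate(t, T0, sigma):
    """lambda(t) = f(t) / P(t), Eq. (16)."""
    f = math.exp(-((t - T0) ** 2) / (2.0 * sigma ** 2)) / (sigma * math.sqrt(2.0 * math.pi))
    return f / normal_reliability(t, T0, sigma)

# Hypothetical element: T0 = 10 000 h, sigma = 2 000 h (sigma/T0 = 0.2).
for t in (6000.0, 10000.0, 12000.0):
    print(t, round(normal_reliability(t, 10000.0, 2000.0), 4),
          normal_failure_rate(t, 10000.0, 2000.0))
```

The failure rate of the normal law increases with t, which is why this law is used for wear-out (gradual) failures.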

Lecture questions:

Introduction

Reliability models of technical systems

Laws of uptime distribution

Introduction

Quantitative methods for studying technical objects, especially at the stages of their design and creation, always require the construction of mathematical models of processes and phenomena. A mathematical model is usually understood as an interconnected set of analytical and logical expressions, as well as initial and boundary conditions that reflect, with a certain approximation, the real processes of the object's functioning. A mathematical model is an information analogue of a full-scale object, with the help of which you can get knowledge about the project being created. The ability to make predictions is considered to be a defining property of the model. All this fully applies to mathematical models of reliability.

A mathematical reliability model is an analytically represented system that gives complete information about the reliability of the object. When a model is built, the process by which reliability changes is simplified and schematized in a certain way. Of the many factors acting on a full-scale object, the main ones are singled out: those whose change can cause noticeable changes in reliability. The relationships between the constituent parts of the system can likewise be represented by analytical dependencies only with certain approximations. As a result, conclusions drawn from the study of a reliability model of an object contain some uncertainty.

The better the model is chosen, the more closely it reflects the characteristic features of the object's functioning, the more accurately the object's reliability will be assessed, and the more reasonable the resulting recommendations for decision-making will be.

1. Reliability models of technical systems

At present, there are general principles for constructing mathematical models of reliability. The model is built only for a certain object, or more precisely for a group of objects of the same type, taking into account the features of their future operation. It must meet the following requirements:

The model should take into account the maximum number of factors that affect the reliability of the object;

The model should be simple enough to obtain, using typical computational tools, output reliability indicators depending on the change in input factors.

These requirements contradict each other, so the construction of models cannot be completely formalized, and creating a model remains, to a certain extent, a creative process.

There are many classifications of reliability models; one of them is shown in Fig. 1.

Fig.1. Classification of reliability models

As follows from Fig. 1, all models can be divided into two large groups: object reliability models and element models. Element reliability models have more physical content and are more specific for elements of a certain design. In these models, the strength characteristics of materials are used, the loads acting on the structure are taken into account, and the influence of operating conditions on the operation of elements is considered. In the study of these models, a formalized description of the processes of occurrence of failures is obtained depending on the selected factors.

Object reliability models are created to describe formally, from the standpoint of reliability, the functioning of an object as a process of interaction of the elements that make it up. In such a model, the elements interact only through the most significant connections that affect the overall reliability of the object.

There are parametric object reliability models and models in terms of element failures. Parametric models contain functions of random parameters of elements, which makes it possible to obtain the desired object reliability indicator at the output of the model. In turn, the parameters of the elements can be functions of the operating time of the object.

Models created in terms of element failures are the most formalized and are the main ones in the analysis of the reliability of complex technical systems. A necessary condition for creating such models is a clear description of the signs of failure of each element of the system. The model reflects the impact of the failure of an individual element on the reliability of the system.

By the principles of their implementation, models are divided into analytical, statistical and combined (also called functional-statistical) models.

Analytical models contain analytical dependencies between the parameters characterizing the reliability of the system and the output indicator of reliability. To obtain such dependences, it is necessary to limit the number of significant factors and significantly simplify the physical picture of the process of changing reliability. As a result, analytical models can describe with sufficient accuracy only relatively simple problems of changing system reliability indicators. With the complication of the system and the increase in the number of factors affecting reliability, statistical models come to the fore.

The method of statistical modeling makes it possible to solve multidimensional problems of great complexity in a short time and with acceptable accuracy. As computer technology develops, the possibilities of this method expand.

The combined method, which creates functional-statistical models, has even greater possibilities. In such models, analytical models are built for the elements, and the system as a whole is modeled statistically.
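A combined (functional-statistical) model can be sketched in a few lines of Python: each element gets an analytical model (here, assumed to be a Weibull law), and the system, a series connection, is evaluated statistically by Monte Carlo. The element parameters and function names are hypothetical; the analytical product formula is included only to show that both approaches agree for this simple case.

```python
import math
import random

def analytic_series_reliability(elements, t):
    """Element models are analytical: P_i(t) = exp(-(t/scale_i)**shape_i).
    A series system works only if every element works: P(t) = prod P_i(t)."""
    return math.prod(math.exp(-(t / scale) ** shape) for scale, shape in elements)

def simulated_series_reliability(elements, t, n_trials=100_000, seed=1):
    """System level is statistical: draw each element's time to failure and
    count the trials in which all elements outlive t."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(n_trials):
        # random.weibullvariate(alpha, beta): alpha = scale, beta = shape
        if all(rng.weibullvariate(scale, shape) > t for scale, shape in elements):
            survived += 1
    return survived / n_trials

# Hypothetical elements as (scale in h, shape): one exponential, two ageing.
elements = [(5000.0, 1.0), (8000.0, 1.5), (12000.0, 2.0)]
print(round(analytic_series_reliability(elements, 1000.0), 4))
print(round(simulated_series_reliability(elements, 1000.0), 4))
```

The statistical estimate carries a sampling error of roughly 0.001 here; its advantage is that the same loop works for structures where no closed-form product exists.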

The choice of one or another mathematical model depends on the objectives of the study of the reliability of the object, on the availability of initial information about the reliability of the elements, on the knowledge of all factors affecting the change in reliability, on the readiness of the analytical apparatus to describe the processes of damage accumulation and failures, and many other reasons. Ultimately, the choice of model is made by the researcher.

3. BASIC MATHEMATICAL MODELS MOST FREQUENTLY USED IN RELIABILITY CALCULATIONS

3.1. Weibull distribution

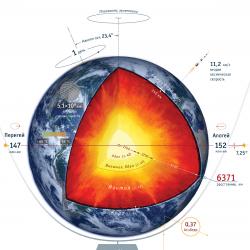

The operating experience of many electronic devices and a significant amount of electromechanical equipment shows that they are characterized by three types of dependence of the failure rate on time (Fig. 3.1), corresponding to three periods of the life of these devices.

It is easy to see that this figure is similar to Fig. 2.3, since the graph of the function λ(t) corresponds to the Weibull law. All three types of dependence of the failure rate on time can be obtained by using the two-parameter Weibull distribution as the probabilistic description of the random time to failure. According to this distribution, the probability density of the moment of failure is

$$f(t) = \lambda \delta\, t^{\delta-1} e^{-\lambda t^{\delta}}, \qquad (3.1)$$

where δ is the shape parameter (found by fitting experimental data, δ > 0) and λ is the scale parameter, λ > 0.

The failure rate is determined by the expression

$$\lambda(t) = \lambda \delta\, t^{\delta-1}. \qquad (3.2)$$

The probability of failure-free operation (uptime) is

$$P(t) = e^{-\lambda t^{\delta}}, \qquad (3.3)$$

and the mean time to failure is

$$T_1 = \frac{\Gamma(1 + 1/\delta)}{\lambda^{1/\delta}}. \qquad (3.4)$$

Note that for δ = 1 the Weibull distribution becomes exponential, and for δ = 2 it becomes the Rayleigh distribution.

For δ < 1 the failure rate decreases monotonically (run-in period), for δ = 1 it is constant, and for δ > 1 it increases monotonically (wear-out period), see Fig. 3.1. Therefore, by choosing δ one can obtain for each of the three sections a theoretical curve λ(t) that closely matches the experimental one, and then calculate the required reliability indicators on the basis of this known law.

The Weibull distribution describes a number of mechanical objects (for example, ball bearings) well enough, and it can be used in accelerated testing of objects under forced conditions.
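The three regimes of λ(t) can be illustrated directly from (3.2)-(3.4). In this sketch the (δ, λ) pairs for the three periods of Fig. 3.1 are hypothetical, chosen only to give plausible magnitudes.

```python
import math

def weibull_rate(t, lam, delta):
    """Failure rate (3.2): lambda(t) = lam * delta * t**(delta - 1)."""
    return lam * delta * t ** (delta - 1.0)

def weibull_reliability(t, lam, delta):
    """Probability of failure-free operation (3.3): P(t) = exp(-lam * t**delta)."""
    return math.exp(-lam * t ** delta)

def weibull_mean(lam, delta):
    """Mean time to failure (3.4): T1 = Gamma(1 + 1/delta) / lam**(1/delta)."""
    return math.gamma(1.0 + 1.0 / delta) / lam ** (1.0 / delta)

times = [100.0, 1000.0, 10000.0]
# Hypothetical (delta, lam) pairs for the three periods of Fig. 3.1.
periods = {"run-in": (0.5, 1e-2), "normal": (1.0, 1e-4), "wear-out": (2.0, 1e-8)}
for name, (delta, lam) in periods.items():
    print(name, [f"{weibull_rate(t, lam, delta):.2e}" for t in times])
```

The run-in rates fall, the normal-operation rate stays at 1e-4 1/h, and the wear-out rates grow linearly with t, as described above.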

3.2. Exponential Distribution

As noted in Sect. 3.1, the exponential distribution of the probability of failure-free operation is a special case of the Weibull distribution with shape parameter δ = 1. This distribution is one-parameter: a single parameter, λ = const, is enough to write the calculation formula. For this law the converse is also true: if the failure rate is constant, the probability of failure-free operation as a function of time obeys the exponential law:

$$P(t) = e^{-\lambda t}. \qquad (3.5)$$

The mean time of failure-free operation under the exponential law is expressed by the formula:

$$T_1 = \int_0^{\infty} e^{-\lambda t}\,dt = \frac{1}{\lambda}. \qquad (3.6)$$

Replacing λ in expression (3.5) with 1/T1, we get

$$P(t) = e^{-t/T_1}. \qquad (3.7)$$

Thus, knowing the mean uptime T1 (or the constant failure rate λ), for an exponential distribution one can find the probability of failure-free operation over the interval from the moment the object is switched on to any given moment t. Note that for the exponential distribution the probability of failure-free operation over an interval exceeding the mean time T1 is less than 0.368, since

$$P(T_1) = e^{-1} \approx 0.368 \quad \text{(Fig. 3.2)}.$$

The duration of normal operation before the onset of aging can be much shorter than T1; that is, the interval over which the exponential model is acceptable is often shorter than the mean uptime calculated from this model. This is easily justified using the variance of the uptime. If the probability density f(t) of a random variable t is given and its mean (mathematical expectation) T1 is known, the variance of the uptime is

$$D = \int_0^{\infty} (t - T_1)^2 f(t)\,dt, \qquad (3.8)$$

and for the exponential distribution it equals

$$D = \int_0^{\infty} (t - T_1)^2 \lambda e^{-\lambda t}\,dt. \qquad (3.9)$$

After some transformations we get:

$$D = \frac{1}{\lambda^2} = T_1^2, \qquad \sigma = \sqrt{D} = T_1. \qquad (3.10)$$

Thus, the most probable values of operating time, grouped around T1, lie in the range T1 ± σ = T1 ± T1, that is, from t = 0 to t = 2T1. The object may operate for a short time or for as long as t = 2T1 while keeping λ = const. But the probability of failure-free operation over the interval 2T1 is low: $P(2T_1) = e^{-2} \approx 0.135$.
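Result (3.10) can be checked numerically: the sketch evaluates the variance integral (3.9) with the trapezoidal rule for a hypothetical T1 = 1000 h and prints the values of P(T1) and P(2T1) quoted above. The function name and integration settings are assumptions.

```python
import math

def exponential_variance(T1, n=400_000, span=40.0):
    """Variance (3.8)-(3.9) of the exponential law with mean T1, computed numerically:
    D = integral of (t - T1)**2 * f(t) dt with f(t) = exp(-t/T1) / T1, using the
    trapezoidal rule on [0, span*T1] (the neglected tail is of order exp(-span))."""
    lam = 1.0 / T1
    g = lambda t: (t - T1) ** 2 * lam * math.exp(-lam * t)
    t_max = span * T1
    h = t_max / n
    inner = sum(g(k * h) for k in range(1, n))
    return h * (0.5 * (g(0.0) + g(t_max)) + inner)

T1 = 1000.0                      # hypothetical mean uptime, h
sigma = math.sqrt(exponential_variance(T1))
print(round(sigma / T1, 4))      # 1.0, i.e. sigma = T1 as in (3.10)
print(round(math.exp(-1.0), 3))  # P(T1)   = 0.368
print(round(math.exp(-2.0), 3))  # P(2*T1) = 0.135
```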

It is important to note that if the object has operated without failure for some time t while keeping λ = const, the further distribution of its uptime is the same as at the moment it was first switched on.

Thus, repeatedly switching off a healthy object at the end of the interval and switching it on again for the same interval produces a sawtooth curve (see Fig. 3.3).

Other distributions do not have this property. From the above follows a seemingly paradoxical conclusion: since the device does not age (does not change its properties) over the entire time t, it is not advisable to carry out preventive maintenance or replacement of devices to prevent sudden failures that obey the exponential law. Of course, this conclusion contains no real paradox, since assuming an exponential distribution of the uptime already means assuming that the device does not age. On the other hand, it is obvious that the longer the device is switched on, the more random causes can lead to its failure. This is very important in operation, when the intervals of preventive maintenance must be chosen so as to keep the reliability of the device high. This issue is considered in detail in the work.
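The "no ageing" property can be shown numerically with the conditional probability P(t + τ)/P(t) of operating a further τ hours after surviving to t. The sketch compares the exponential law (3.5) with a hypothetical Weibull law with δ = 2; both parameter sets are assumptions for illustration.

```python
import math

def conditional_reliability(P, t, tau):
    """Probability of operating a further tau hours after surviving to t: P(t + tau) / P(t)."""
    return P(t + tau) / P(t)

lam = 1e-3                                    # hypothetical constant rate, 1/h
P_exp = lambda t: math.exp(-lam * t)          # exponential law (3.5)
P_weib = lambda t: math.exp(-1e-6 * t ** 2)   # hypothetical Weibull law, delta = 2

tau = 500.0
for t in (0.0, 500.0, 1000.0):
    print(t, round(conditional_reliability(P_exp, t, tau), 4),
          round(conditional_reliability(P_weib, t, tau), 4))
```

For the exponential law the result is e^(-0.5) ≈ 0.6065 regardless of t, while for the ageing Weibull object it drops as t grows, which is what makes preventive replacement worthwhile there.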

The exponential model is often used for a priori analysis, since relatively simple calculations give simple relationships for the various design options of the system being created. At the stage of a posteriori analysis (of experimental data), the agreement of the exponential model with the test results should be checked. In particular, if processing the test results shows that the estimated mean uptime and standard deviation are approximately equal, $\hat{T}_1 \approx \hat{\sigma}$, this is evidence that the analyzed dependence is exponential.

In practice λ ≠ const is common; even so, the exponential model can be used over limited periods of time. This is justified because over a limited period a variable failure rate can be replaced, without a large error, by its average value:

$$\lambda(t) \approx \lambda_{\mathrm{avg}} = \text{const}.$$
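A minimal sketch of this replacement, assuming a hypothetical Weibull law with δ = 2: the average rate over [t1, t2] is the increase of the cumulative hazard divided by the interval length, and the resulting exponential model is compared with the exact conditional reliability inside the interval.

```python
import math

lam, delta = 1e-6, 2.0                    # hypothetical Weibull parameters, (3.1)-(3.3)

def cumulative_hazard(t):
    """Lambda(t) = lam * t**delta, so that P(t) = exp(-Lambda(t))."""
    return lam * t ** delta

def average_rate(t1, t2):
    """Mean of lambda(t) over [t1, t2]: (Lambda(t2) - Lambda(t1)) / (t2 - t1)."""
    return (cumulative_hazard(t2) - cumulative_hazard(t1)) / (t2 - t1)

t1, t2 = 1000.0, 1200.0
lam_avg = average_rate(t1, t2)            # constant rate used on [t1, t2]
for t in (1050.0, 1100.0, 1200.0):
    exact = math.exp(-(cumulative_hazard(t) - cumulative_hazard(t1)))  # P(t)/P(t1)
    approx = math.exp(-lam_avg * (t - t1))                            # exponential model
    print(t, round(exact, 4), round(approx, 4))
```

The two models coincide at the ends of the interval and differ by less than 0.01 inside it; the error grows if the interval is made longer.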

3.3. Rayleigh distribution

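The probability density in Rayleigh's law (see Fig. 3.4) has the form

$$f(t) = \frac{t}{\delta_*^{2}} \exp\left(-\frac{t^{2}}{2\delta_*^{2}}\right), \qquad (3.11)$$

where δ* is the Rayleigh distribution parameter (equal to the mode of this distribution). It should not be confused with the standard deviation: $\sigma = \delta_*\sqrt{(4-\pi)/2} \approx 0.655\,\delta_*$.

The failure rate is:

$$\lambda(t) = \frac{f(t)}{P(t)} = \frac{t}{\delta_*^{2}}.$$

A characteristic feature of the Rayleigh distribution is that the graph of λ(t) is a straight line starting from the origin.

The probability of failure-free operation of the object in this case is determined by the expression

$$P(t) = \exp\left(-\frac{t^{2}}{2\delta_*^{2}}\right). \qquad (3.12)$$

MTBF

$$T_1 = \delta_*\sqrt{\pi/2} \approx 1.253\,\delta_*. \qquad (3.13)$$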

3.4. Normal distribution (Gaussian distribution)

The normal distribution law is characterized by a probability density of the form

$$f(x) = \frac{1}{\sigma_x\sqrt{2\pi}} \exp\left[-\frac{(x - m_x)^{2}}{2\sigma_x^{2}}\right], \qquad (3.14)$$

where m_x and σ_x are, respectively, the mathematical expectation and the standard deviation of the random variable x.

When analyzing the reliability of electrical installations, the random variable may be, in addition to time, current, voltage or other quantities. The normal law is a two-parameter law: to write it, one needs to know m_x and σ_x.

The probability of failure-free operation is determined by the formula

$$P(t) = \int_{t}^{\infty} f(x)\,dx, \qquad (3.15)$$

and the failure rate by the formula

$$\lambda(t) = \frac{f(t)}{P(t)}.$$

Fig. 3.5 shows the curves λ(t), P(t) and f(t) for the case σ_t ≪ m_t, which is characteristic of elements used in automatic control systems.

This manual shows only the most common distribution laws of a random variable. A number of laws are known, which are also used in reliability calculations: gamma distribution, -distribution, Maxwell distribution, Erlang distribution, etc.

It should be noted that if the inequality σ_t ≪ m_t does not hold, the truncated normal distribution should be used.

A well-founded choice of the distribution of time to failure requires a large number of observed failures and an explanation of the physical processes occurring in objects before failure.

In highly reliable elements of electrical installations, only a small fraction of the objects initially on test fails during operation or reliability testing. Therefore, the numerical characteristics obtained by processing the experimental data depend strongly on the assumed distribution of time to failure. As has been shown, with different laws of time to failure the mean time to failure calculated from the same initial data can differ by a factor of hundreds. Therefore, the choice of a theoretical model for the distribution of time to failure deserves special attention, with appropriate justification that the theoretical and experimental distributions agree (see Section 8).

3.5. Examples of using distribution laws in reliability calculations

Let us determine the reliability indicators for the most commonly used distribution laws for the time of occurrence of failures.

3.5.1. Determination of Reliability Indicators with an Exponential Distribution Law

Example. Let the object have an exponential distribution of the time to failure with failure rate λ = 2.5·10⁻⁵ 1/h.

It is required to calculate the main indicators of the reliability of a non-restorable object for t = 2000 hours.

Solution.

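- Probability of failure-free operation, by (3.5): $P(2000) = e^{-\lambda t} = e^{-2.5\cdot10^{-5}\cdot 2000} = e^{-0.05} \approx 0.9512$.
- Probability of failure: $Q(2000) = 1 - P(2000) = 1 - 0.9512 = 0.0488$.
- Using expression (2.5), the probability of failure-free operation in the interval from 500 h to 2500 h, provided that the object has operated without failure for 500 h, is $P(500, 2500) = P(2500)/P(500) = e^{-\lambda\cdot 2000} \approx 0.9512$.
- MTBF: $T_1 = 1/\lambda = 1/(2.5\cdot10^{-5}) = 40\,000$ h.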
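The arithmetic of this example can be reproduced in a few lines; the sketch uses only the data of the example and formulas (3.5)-(3.6).

```python
import math

lam = 2.5e-5      # failure rate from the example, 1/h
t = 2000.0        # operating time, h

P = math.exp(-lam * t)                                      # (3.5)
Q = 1.0 - P
P_500_2500 = math.exp(-lam * 2500) / math.exp(-lam * 500)   # P(2500) / P(500)
T1 = 1.0 / lam                                              # (3.6)

print(round(P, 4), round(Q, 4), round(P_500_2500, 4), round(T1))  # 0.9512 0.0488 0.9512 40000
```

The conditional probability equals P(2000) because, for the exponential law, only the length of the interval matters.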

3.5.2. Determination of Reliability Measures in the Rayleigh Distribution

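Example. Distribution parameter δ* = 100 h. It is required to determine P(t), Q(t), λ(t) and T1 for t = 50 h.

Solution.

Using formulas (3.11), (3.12), (3.13), we obtain

$$P(50) = \exp\left(-\frac{50^{2}}{2\cdot 100^{2}}\right) = e^{-0.125} \approx 0.8825, \qquad Q(50) = 1 - P(50) \approx 0.1175,$$

$$\lambda(50) = \frac{50}{100^{2}} = 5\cdot10^{-3}\ \text{1/h}, \qquad T_1 = 100\sqrt{\pi/2} \approx 125.3\ \text{h}.$$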
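The same numbers can be obtained with a short script using the Rayleigh formulas (3.12), (3.13) and λ(t) = t/δ*²:

```python
import math

d = 100.0   # Rayleigh parameter delta_*, h
t = 50.0    # operating time, h

P = math.exp(-t ** 2 / (2 * d ** 2))    # (3.12)
Q = 1.0 - P
rate = t / d ** 2                        # lambda(t) = t / delta_*^2, 1/h
T1 = d * math.sqrt(math.pi / 2)          # (3.13)

print(round(P, 4), round(Q, 4), rate, round(T1, 1))  # 0.8825 0.1175 0.005 125.3
```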

3.5.3. Determination of circuit parameters with the Gaussian distribution

Example. An electrical circuit is assembled from three standard resistors connected in series;

their nominal resistances and tolerances (the deviation of resistance from the nominal value, in %) are given in the initial data.

It is required to determine the total resistance of the circuit, taking into account the deviations of the parameters of the resistors.

Solution.

It is known that in mass production of elements of the same type, the distribution of their parameters obeys the normal law. Using the 3σ (three-sigma) rule, we determine from the initial data the ranges in which the resistances of the resistors lie: $R_i \pm \Delta R_i$, where $\Delta R_i = 3\sigma_i$ (i = 1, 2, 3).

Consequently,

When the element parameters are normally distributed and the elements are selected at random when assembling the circuit, the resulting value R_e is a function of random variables and is also normally distributed; in our case (a sum of independent resistances) its variance is

$$\sigma_e^{2} = \sigma_1^{2} + \sigma_2^{2} + \sigma_3^{2}.$$

Since R_e is normally distributed, using the 3σ rule we write

$$R_e = (R_1 + R_2 + R_3) \pm 3\sigma_e = (R_1 + R_2 + R_3) \pm \sqrt{\Delta R_1^{2} + \Delta R_2^{2} + \Delta R_3^{2}},$$

where R_1, R_2, R_3 are the nominal (rated) resistances of the resistors.

In this way

Or

This example shows that the resulting statistical error of series-connected elements is smaller than the arithmetic sum of their individual errors, and the gain grows with the number of elements. In particular, if the sum of the errors of all individual elements is ±600 Ohm, the resulting error is ±374 Ohm. In more complex circuits, for example in oscillatory circuits consisting of inductances and capacitances, a deviation of the inductance or capacitance from its specified value changes the resonant frequency, and the possible range of this change can be estimated by a method similar to the one used here for the resistors.
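The difference between the worst-case sum and the root-sum-square (3σ) error can be sketched as follows. The individual tolerances of 100, 200 and 300 Ohm are a hypothetical assumption: the original values are not given here, and these are used only because they reproduce the ±600 Ohm and ±374 Ohm totals quoted above.

```python
import math

# Hypothetical tolerances (3-sigma deviations), Ohm; chosen only because their
# sum, 600 Ohm, and root-sum-square, about 374 Ohm, match the totals quoted above.
tolerances = [100.0, 200.0, 300.0]

worst_case = sum(tolerances)                               # all deviations at their limits
statistical = math.sqrt(sum(d ** 2 for d in tolerances))   # 3*sigma_e of the sum
print(worst_case, round(statistical))                      # 600.0 374

# For n identical elements the ratio statistical / worst-case is 1/sqrt(n).
for n in (1, 4, 16, 64):
    print(n, math.sqrt(n * 50.0 ** 2) / (n * 50.0))
```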

3.5.4. An example of determining the reliability indicators of a non-repairable object according to experimental data

Example. N0 = 1000 samples of the same type of non-repairable equipment were tested; failures were recorded every 100 hours.

It is required to determine the reliability indicators P(t) and λ(t) in the time interval from 0 to 1500 hours. The number of failures in each interval is given in Table 3.1.

Table 3.1

Initial data and calculation results

| Interval number i | Δt_i, h | n(Δt_i), pcs. | P(t_i) | λ(Δt_i)·10³, 1/h |
|---|---|---|---|---|
| 1 | 0-100 | 50 | 0.950 | |
| 2 | 100-200 | 40 | 0.910 | 0.430 |
| 3 | 200-300 | 32 | 0.878 | 0.358 |
| 4 | 300-400 | 25 | 0.853 | 0.284 |
| 5 | 400-500 | 20 | 0.833 | 0.238 |
| 6 | 500-600 | 17 | 0.816 | 0.206 |
| 7 | 600-700 | 16 | 0.800 | 0.198 |
| 8 | 700-800 | 16 | 0.784 | 0.202 |
| 9 | 800-900 | 15 | 0.769 | 0.193 |
| 10 | 900-1000 | 14 | 0.755 | 0.184 |
| 11 | 1000-1100 | 15 | 0.740 | 0.200 |
| 12 | 1100-1200 | 14 | 0.726 | 0.191 |
| 13 | 1200-1300 | 14 | 0.712 | 0.195 |
| 14 | 1300-1400 | 13 | 0.699 | 0.184 |
| 15 | 1400-1500 | 14 | 0.685 | 0.202 |
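The last two columns of Table 3.1 can be recomputed from the failure counts. This sketch assumes the usual interval estimates P(t_i) = N(t_i)/N0 and λ = n(Δt_i)/(N_avg·Δt), with N_avg the mean of the numbers of survivors at the start and end of the interval; this estimator reproduces most rows, but a few recomputed rates differ from the printed table in the third decimal (for example, row 4 gives 0.289 rather than 0.284), and row 1, left blank in the table, comes out as 0.513.

```python
N0 = 1000                     # objects on test
dt = 100.0                    # interval length, h
failures = [50, 40, 32, 25, 20, 17, 16, 16, 15, 14, 15, 14, 14, 13, 14]  # Table 3.1

surviving = [N0]
for n in failures:
    surviving.append(surviving[-1] - n)

P = [s / N0 for s in surviving[1:]]                        # P(t_i) at the end of interval i
lam = [n / (0.5 * (surviving[i] + surviving[i + 1]) * dt)  # failures per mean number
       for i, n in enumerate(failures)]                    # of survivors per hour, 1/h

for i, (p, l) in enumerate(zip(P, lam), start=1):
    print(i, f"{p:.3f}", f"{l * 1e3:.3f}")
```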

Solution.

The mean time to failure, provided that all N0 objects fail, is determined by the expression

$$\hat{T}_1 = \frac{1}{N_0}\sum_{j=1}^{N_0} t_j,$$

where t_j is the time to failure of the j-th object (j = 1, ..., N0). In this experiment, not all of the N0 = 1000 objects failed. Therefore, only an approximate value of the mean time to failure can be found from the experimental data. In accordance with the task, we use the formula

$$\hat{T}_1 \approx \frac{1}{r}\left[\sum_{j=1}^{r} t_j + (N_0 - r)\,t_r\right], \qquad r \le N_0, \qquad (3.16)$$

where t_j is the time to failure of the j-th object (j = 1, ..., r); r is the number of recorded failures (in our case r = 315); t_r is the operating time to the r-th (last) failure. The graph (Fig. 3.6) shows that after the running-in period, for t ≥ 600 h, the failure rate becomes constant. If λ is assumed to remain constant afterwards, the period of normal operation corresponds to the exponential model of time to failure for the tested type of objects. Then the mean time to failure is

$$T_1 = \frac{1}{\lambda} \approx \frac{1}{0.192\cdot10^{-3}} \approx 5208\ \text{h}.$$

Thus, of the two estimates of the mean time to failure,

$\hat{T}_1$ = 3831 h and T1 = 5208 h, one must choose the one that better matches the actual distribution of failures. Here it can be assumed that if all objects were tested to failure (r = N0), completing the graph of Fig. 3.6 and revealing the moment when λ begins to increase, then for the interval of normal operation (λ = const) the mean time to failure T1 = 5208 h should be taken.

In conclusion, we note that for this example determining the mean time to failure by formula (2.7) when r ≪ N0 gives a gross error. In our example

$$\hat{T}_1 = \frac{1}{N_0}\sum_{j=1}^{r} t_j.$$

If instead of N0 we substitute the number of failed objects, r = 315, we get

$$\hat{T}_1 = \frac{1}{r}\sum_{j=1}^{r} t_j.$$

In the latter case, the N0 - r = 1000 - 315 = 685 objects that did not fail during the test are excluded from the assessment altogether; that is, the mean time to failure is determined from only 315 objects. Such errors are quite common in practical calculations.
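The estimates discussed above can be recomputed from Table 3.1. The sketch assumes that every failure in an interval occurs at the interval midpoint and that t_r = 1500 h (the end of the test); under these assumptions formula (3.16) reproduces the 3831 h quoted above. The rate 0.192·10⁻³ 1/h is the value implied by T1 = 5208 h.

```python
N0, dt = 1000, 100.0
failures = [50, 40, 32, 25, 20, 17, 16, 16, 15, 14, 15, 14, 14, 13, 14]  # Table 3.1
r = sum(failures)                       # 315 recorded failures
t_r = dt * len(failures)                # 1500 h, end of the test

# Assumption: every failure in an interval is placed at the interval midpoint.
sum_t = sum(n * (i + 0.5) * dt for i, n in enumerate(failures))

T1_censored = (sum_t + (N0 - r) * t_r) / r   # formula (3.16)
T1_wrong_N0 = sum_t / N0                     # formula (2.7) with N0, r << N0
T1_wrong_r = sum_t / r                       # survivors ignored altogether
T1_exp = 1.0 / 0.192e-3                      # exponential model, lambda = 0.192e-3 1/h

print(round(T1_censored), round(T1_wrong_N0), round(T1_wrong_r), round(T1_exp))  # 3831 179 569 5208
```

The two incorrect estimates (about 179 h and 569 h) are far below both 3831 h and 5208 h, which illustrates how strongly ignoring the surviving objects biases the result.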

The Weibull distribution is most often used to study failure rates in the burn-in and aging periods. Using the example of the service-life distribution of the insulation of some electrical network elements, we consider in detail the physical processes that lead to aging and insulation failure and are described by the Weibull distribution.

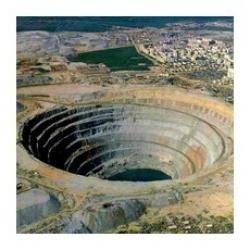

The reliability of the most common elements of electrical networks, such as power transformers and cable lines, is largely determined by the reliability of the insulation, the "strength" of which changes during operation. The main characteristic of the insulation of electromechanical products is its electrical strength, which, depending on the operating conditions and type of product, is determined by mechanical strength, elasticity, which excludes the formation of residual deformations, cracks, delaminations under the influence of mechanical loads, i.e. inhomogeneities.

The homogeneity and solidity of the insulation structure and its high thermal conductivity exclude the occurrence of increased local heating, which inevitably leads to an increase in the degree of inhomogeneity of the electrical strength. The destruction of insulation during the operation of the element occurs mainly as a result of heating by load currents and temperature effects of the external environment.

Considering the two main factors affecting the life of the insulation (thermal aging and mechanical stress), which are also closely related, we can conclude that both fatigue phenomena in the insulation and its thermal aging largely depend on the quality of manufacture and the material of the electrical product, and on the homogeneity of the insulation material, which ensures the absence of local heating (since it is hard to assume that all the insulation fails at once, i.e. that breakdown occurs over the entire insulation area).

Microcracks, delaminations and other inhomogeneities of the material are randomly distributed in relation to their position and size throughout the entire volume (area) of the insulation. Under the influence of variable unfavorable conditions of both thermal and electrodynamic nature, the inhomogeneities of the material increase: for example, a microcrack propagates deep into the insulation and, if the voltage is accidentally increased, can cause insulation breakdown. The reason for failure can be even a slight inhomogeneity of the material.

It is natural to assume that the number of adverse effects (thermal or electromechanical) that cause insulation breakdown is a function that decreases depending on the size of the inhomogeneity. This number is minimal for the largest inhomogeneity (cracks, delaminations, etc.).

Therefore, the number of adverse effects that determines the service life of the insulation must obey the distribution law of the minimum of a set of independent random variables corresponding to inhomogeneities of different sizes:

$$T_{\mathrm{ins}} = \min(T_1, T_2, \ldots, T_n),$$

where T_ins is the time of failure-free operation of the entire insulation and T_i is the uptime of the i-th section (i = 1, 2, ..., n).

Thus, to determine the uptime distribution law for an object such as the insulation of an electrical network element, one must find the distribution law of the minimum uptime over all sections. Of greatest interest is the case when the uptime distributions of individual sections differ in their parameters but have the same form, i.e. there are no pronounced differences between the regions.

From the standpoint of reliability, the sections of such a system form a series connection. The distribution function of the uptime of a system of n sections connected in series is

$$F(t) = 1 - \prod_{i=1}^{n}\left[1 - F_i(t)\right].$$

Consider the general case where the distribution F_i(t) has a so-called "sensitivity threshold", i.e. the element is guaranteed not to fail in the time interval (0, t0) (in a special case, t0 may be equal to 0). Clearly, the function F(t0 + Δt) > 0 is always a non-decreasing function of its argument.

For the system, one can obtain the asymptotic uptime distribution law:

$$F(t) = 1 - \exp\left[-c\,(t - t_0)^{a}\right], \qquad t \ge t_0.$$

If the distribution has no sensitivity threshold t0, the distribution law takes the form

$$F(t) = 1 - e^{-c\,t^{a}},$$

where c is a constant coefficient, c > 0, and a is the Weibull exponent.

This law is called Weibull distribution. It is quite often used in approximating the distribution of uptime for a system with a finite number of series (in terms of reliability) connected elements (extended cable lines with a significant number of couplings, etc.).
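The weakest-link behaviour can be illustrated for a hypothetical cable line of 20 identical sections, each with a Weibull law of the same exponent a: the product of the section reliabilities is exactly a single Weibull law whose coefficient c is the sum of the section coefficients. The parameter values below are assumptions for illustration only.

```python
import math

def series_reliability(t, sections):
    """P(t) of n sections in series: product of exp(-c_i * t**a) (same exponent a)."""
    return math.prod(math.exp(-c * t ** a) for c, a in sections)

# Hypothetical cable line: 20 identical sections, c = 1e-7, a = 2.5.
a = 2.5
sections = [(1e-7, a)] * 20
c_system = sum(c for c, _ in sections)       # for a common a the coefficients add up

for t in (50.0, 100.0, 200.0):
    direct = series_reliability(t, sections)
    weibull = math.exp(-c_system * t ** a)   # a single Weibull law with c = sum(c_i)
    print(t, round(direct, 6), round(weibull, 6))
```

With different exponents the product is no longer an exact Weibull law; the asymptotic result above then describes its behaviour for a large number of sections.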

The uptime distribution density is

$$f(t) = c\,a\,t^{a-1} e^{-c\,t^{a}}.$$

When a = 1, the distribution density becomes an ordinary exponential function (Fig. 3.3).

For the failure rate under the Weibull distribution density we obtain

$$\lambda(t) = \frac{f(t)}{1 - F(t)} = c\,a\,t^{a-1}.$$

The failure rate for this law, depending on the distribution parameter a, can grow, remain constant (exponential law) and decrease (Fig. 3.4).

For a = 2 the uptime distribution coincides with the Rayleigh law, and for larger values of a (roughly a ≈ 3 to 4) it is fairly well approximated by the normal law in the vicinity of the mean uptime.

Fig. 3.3.

Fig. 3.4.

As can be seen from Figs. 3.3 and 3.4, the exponential distribution law is a special case of the Weibull law for a = 1 (λ = const).

The Weibull law is very convenient for calculations, but it requires empirical selection of the parameters c and a for the observed dependence λ(t).

The mathematical expectation (mean uptime) and the variance under the Weibull law are

$$T_1 = \frac{\Gamma(1 + 1/a)}{c^{1/a}}, \qquad D = \frac{\Gamma(1 + 2/a) - \Gamma^{2}(1 + 1/a)}{c^{2/a}},$$

where Γ(x) is the gamma function, found from the table of Γ(x) (see Appendix 2); c is a constant coefficient that determines the probability of elementary damage occurring in the time interval (0, t).
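The mean and variance formulas can be evaluated with `math.gamma` instead of a table and cross-checked by simulation. The sketch assumes hypothetical parameters c = 1e-6 and a = 2. Python's `random.weibullvariate(alpha, beta)` uses F(t) = 1 - exp(-(t/alpha)**beta), so alpha = c**(-1/a) and beta = a.

```python
import math
import random

def weibull_mean_var(c, a):
    """Mean T1 = Gamma(1 + 1/a) / c**(1/a) and variance
    D = (Gamma(1 + 2/a) - Gamma(1 + 1/a)**2) / c**(2/a) for F(t) = 1 - exp(-c t**a)."""
    g1 = math.gamma(1.0 + 1.0 / a)
    g2 = math.gamma(1.0 + 2.0 / a)
    return g1 / c ** (1.0 / a), (g2 - g1 ** 2) / c ** (2.0 / a)

def simulated_mean_var(c, a, n=200_000, seed=7):
    """Monte Carlo cross-check; random.weibullvariate(alpha, beta) uses
    F(t) = 1 - exp(-(t/alpha)**beta), so alpha = c**(-1/a) and beta = a."""
    rng = random.Random(seed)
    xs = [rng.weibullvariate(c ** (-1.0 / a), a) for _ in range(n)]
    mean = sum(xs) / n
    var = sum((x - mean) ** 2 for x in xs) / (n - 1)
    return mean, var

c, a = 1e-6, 2.0              # hypothetical parameters (a = 2: Rayleigh case)
print(weibull_mean_var(c, a))
print(simulated_mean_var(c, a))
```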