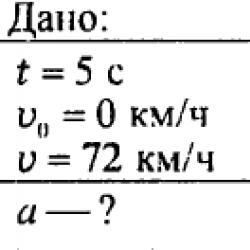

Gaussian method 4 equations. Solving systems of linear equations using the Gaussian method. Criteria for assessing the performance of independent work

Gauss method perfect for solving systems of linear algebraic equations (SLAEs). It has a number of advantages compared to other methods:

- firstly, there is no need to first examine the system of equations for consistency;

- secondly, the Gauss method can solve not only SLAEs in which the number of equations coincides with the number of unknown variables and the main matrix of the system is non-singular, but also systems of equations in which the number of equations does not coincide with the number of unknown variables or the determinant of the main matrix is equal to zero;

- thirdly, the Gaussian method leads to results with a relatively small number of computational operations.

Brief overview of the article.

First, we give the necessary definitions and introduce notations.

Next, we will describe the algorithm of the Gauss method for the simplest case, that is, for systems of linear algebraic equations, the number of equations in which coincides with the number of unknown variables and the determinant of the main matrix of the system is not equal to zero. When solving such systems of equations, the essence of the Gauss method is most clearly visible, which is the sequential elimination of unknown variables. Therefore, the Gaussian method is also called the method of sequential elimination of unknowns. We will show detailed solutions of several examples.

In conclusion, we will consider the solution by the Gauss method of systems of linear algebraic equations, the main matrix of which is either rectangular or singular. The solution to such systems has some features, which we will examine in detail using examples.

Page navigation.

Basic definitions and notations.

Consider a system of p linear equations with n unknowns (p can be equal to n):

Where are unknown variables, are numbers (real or complex), and are free terms.

If ![]() , then the system of linear algebraic equations is called homogeneous, otherwise - heterogeneous.

, then the system of linear algebraic equations is called homogeneous, otherwise - heterogeneous.

The set of values of unknown variables for which all equations of the system become identities is called decision of the SLAU.

If there is at least one solution to a system of linear algebraic equations, then it is called joint, otherwise - non-joint.

If a SLAE has a unique solution, then it is called certain. If there is more than one solution, then the system is called uncertain.

They say that the system is written in coordinate form, if it has the form

.

This system in matrix form records has the form , where  - the main matrix of the SLAE, - the matrix of the column of unknown variables, - the matrix of free terms.

- the main matrix of the SLAE, - the matrix of the column of unknown variables, - the matrix of free terms.

If we add a matrix-column of free terms to matrix A as the (n+1)th column, we get the so-called extended matrix systems of linear equations. Typically, an extended matrix is denoted by the letter T, and the column of free terms is separated by a vertical line from the remaining columns, that is,

The square matrix A is called degenerate, if its determinant is zero. If , then matrix A is called non-degenerate.

The following point should be noted.

If you perform the following actions with a system of linear algebraic equations

- swap two equations,

- multiply both sides of any equation by an arbitrary and non-zero real (or complex) number k,

- to both sides of any equation add the corresponding parts of another equation, multiplied by an arbitrary number k,

then you get an equivalent system that has the same solutions (or, just like the original one, has no solutions).

For an extended matrix of a system of linear algebraic equations, these actions will mean carrying out elementary transformations with the rows:

- swapping two lines,

- multiplying all elements of any row of matrix T by a nonzero number k,

- adding to the elements of any row of a matrix the corresponding elements of another row, multiplied by an arbitrary number k.

Now we can proceed to the description of the Gauss method.

Solving systems of linear algebraic equations, in which the number of equations is equal to the number of unknowns and the main matrix of the system is non-singular, using the Gauss method.

What would we do at school if we were given the task of finding a solution to a system of equations?  .

.

Some would do that.

Note that by adding the left side of the first to the left side of the second equation, and the right side to the right side, you can get rid of the unknown variables x 2 and x 3 and immediately find x 1:

We substitute the found value x 1 =1 into the first and third equations of the system:

If we multiply both sides of the third equation of the system by -1 and add them to the corresponding parts of the first equation, we get rid of the unknown variable x 3 and can find x 2:

We substitute the resulting value x 2 = 2 into the third equation and find the remaining unknown variable x 3:

Others would have done differently.

Let us resolve the first equation of the system with respect to the unknown variable x 1 and substitute the resulting expression into the second and third equations of the system in order to exclude this variable from them:

Now let’s solve the second equation of the system for x 2 and substitute the result obtained into the third equation to eliminate the unknown variable x 2 from it:

From the third equation of the system it is clear that x 3 =3. From the second equation we find ![]() , and from the first equation we get .

, and from the first equation we get .

Familiar solutions, right?

The most interesting thing here is that the second solution method is essentially the method of sequential elimination of unknowns, that is, the Gaussian method. When we expressed the unknown variables (first x 1, at the next stage x 2) and substituted them into the remaining equations of the system, we thereby excluded them. We carried out elimination until there was only one unknown variable left in the last equation. The process of sequentially eliminating unknowns is called direct Gaussian method. After completing the forward move, we have the opportunity to calculate the unknown variable found in the last equation. With its help, we find the next unknown variable from the penultimate equation, and so on. The process of sequentially finding unknown variables while moving from the last equation to the first is called inverse of the Gaussian method.

It should be noted that when we express x 1 in terms of x 2 and x 3 in the first equation, and then substitute the resulting expression into the second and third equations, the following actions lead to the same result:

Indeed, such a procedure also makes it possible to eliminate the unknown variable x 1 from the second and third equations of the system:

Nuances with the elimination of unknown variables using the Gaussian method arise when the equations of the system do not contain some variables.

For example, in SLAU  in the first equation there is no unknown variable x 1 (in other words, the coefficient in front of it is zero). Therefore, we cannot solve the first equation of the system for x 1 in order to eliminate this unknown variable from the remaining equations. The way out of this situation is to swap the equations of the system. Since we are considering systems of linear equations whose determinants of the main matrices are different from zero, there is always an equation in which the variable we need is present, and we can rearrange this equation to the position we need. For our example, it is enough to swap the first and second equations of the system

in the first equation there is no unknown variable x 1 (in other words, the coefficient in front of it is zero). Therefore, we cannot solve the first equation of the system for x 1 in order to eliminate this unknown variable from the remaining equations. The way out of this situation is to swap the equations of the system. Since we are considering systems of linear equations whose determinants of the main matrices are different from zero, there is always an equation in which the variable we need is present, and we can rearrange this equation to the position we need. For our example, it is enough to swap the first and second equations of the system  , then you can resolve the first equation for x 1 and exclude it from the remaining equations of the system (although x 1 is no longer present in the second equation).

, then you can resolve the first equation for x 1 and exclude it from the remaining equations of the system (although x 1 is no longer present in the second equation).

We hope you get the gist.

Let's describe Gaussian method algorithm.

Suppose we need to solve a system of n linear algebraic equations with n unknown variables of the form  , and let the determinant of its main matrix be different from zero.

, and let the determinant of its main matrix be different from zero.

We will assume that , since we can always achieve this by rearranging the equations of the system. Let's eliminate the unknown variable x 1 from all equations of the system, starting with the second. To do this, to the second equation of the system we add the first, multiplied by , to the third equation we add the first, multiplied by , and so on, to the nth equation we add the first, multiplied by . The system of equations after such transformations will take the form

where and  .

.

We would have arrived at the same result if we had expressed x 1 in terms of other unknown variables in the first equation of the system and substituted the resulting expression into all other equations. Thus, the variable x 1 is excluded from all equations, starting from the second.

Next, we proceed in a similar way, but only with part of the resulting system, which is marked in the figure

To do this, to the third equation of the system we add the second, multiplied by , to the fourth equation we add the second, multiplied by , and so on, to the nth equation we add the second, multiplied by . The system of equations after such transformations will take the form

where and  . Thus, the variable x 2 is excluded from all equations, starting from the third.

. Thus, the variable x 2 is excluded from all equations, starting from the third.

Next, we proceed to eliminating the unknown x 3, while we act similarly with the part of the system marked in the figure

So we continue the direct progression of the Gaussian method until the system takes the form

From this moment we begin the reverse of the Gaussian method: we calculate x n from the last equation as , using the obtained value of x n we find x n-1 from the penultimate equation, and so on, we find x 1 from the first equation.

Let's look at the algorithm using an example.

Example.

Gauss method.

Gauss method.

Solution.

The coefficient a 11 is non-zero, so let’s proceed to the direct progression of the Gaussian method, that is, to the exclusion of the unknown variable x 1 from all equations of the system except the first. To do this, to the left and right sides of the second, third and fourth equations, add the left and right sides of the first equation, multiplied by , respectively.  And :

And :

The unknown variable x 1 has been eliminated, let's move on to eliminating x 2 . To the left and right sides of the third and fourth equations of the system we add the left and right sides of the second equation, multiplied by respectively  And

And  :

:

To complete the forward progression of the Gaussian method, we need to eliminate the unknown variable x 3 from the last equation of the system. Let us add to the left and right sides of the fourth equation, respectively, the left and right sides of the third equation, multiplied by  :

:

You can begin the reverse of the Gaussian method.

From the last equation we have  ,

,

from the third equation we get,

from the second,

from the first one.

To check, you can substitute the obtained values of the unknown variables into the original system of equations. All equations turn into identities, which indicates that the solution using the Gauss method was found correctly.

Answer:

Now let’s give a solution to the same example using the Gaussian method in matrix notation.

Example.

Find the solution to the system of equations  Gauss method.

Gauss method.

Solution.

The extended matrix of the system has the form  . At the top of each column are the unknown variables that correspond to the elements of the matrix.

. At the top of each column are the unknown variables that correspond to the elements of the matrix.

The direct approach of the Gaussian method here involves reducing the extended matrix of the system to a trapezoidal form using elementary transformations. This process is similar to the elimination of unknown variables that we did with the system in coordinate form. Now you will see this.

Let's transform the matrix so that all elements in the first column, starting from the second, become zero. To do this, to the elements of the second, third and fourth lines we add the corresponding elements of the first line multiplied by ,  and accordingly:

and accordingly:

Next, we transform the resulting matrix so that in the second column all elements, starting from the third, become zero. This would correspond to eliminating the unknown variable x 2 . To do this, to the elements of the third and fourth rows we add the corresponding elements of the first row of the matrix, multiplied by respectively  And

And  :

:

It remains to exclude the unknown variable x 3 from the last equation of the system. To do this, to the elements of the last row of the resulting matrix we add the corresponding elements of the penultimate row, multiplied by  :

:

It should be noted that this matrix corresponds to a system of linear equations

which was obtained earlier after a forward move.

It's time to turn back. In matrix notation, the inverse of the Gaussian method involves transforming the resulting matrix such that the matrix marked in the figure

became diagonal, that is, took the form

where are some numbers.

These transformations are similar to the forward transformations of the Gaussian method, but are performed not from the first line to the last, but from the last to the first.

Add to the elements of the third, second and first lines the corresponding elements of the last line, multiplied by  , on and on

, on and on  respectively:

respectively:

Now add to the elements of the second and first lines the corresponding elements of the third line, multiplied by and by, respectively:

At the last step of the reverse Gaussian method, to the elements of the first row we add the corresponding elements of the second row, multiplied by:

The resulting matrix corresponds to the system of equations  , from where we find the unknown variables.

, from where we find the unknown variables.

Answer:

NOTE.

When using the Gauss method to solve systems of linear algebraic equations, approximate calculations should be avoided, as this can lead to completely incorrect results. We recommend not rounding decimals. It is better to move from decimal fractions to ordinary fractions.

Example.

Solve a system of three equations using the Gauss method  .

.

Solution.

Note that in this example the unknown variables have a different designation (not x 1, x 2, x 3, but x, y, z). Let's move on to ordinary fractions:

Let us exclude the unknown x from the second and third equations of the system:

In the resulting system, the unknown variable y is absent in the second equation, but y is present in the third equation, therefore, let’s swap the second and third equations:

This completes the direct progression of the Gauss method (there is no need to exclude y from the third equation, since this unknown variable no longer exists).

Let's start the reverse move.

From the last equation we find  ,

,

from the penultimate

from the first equation we have

Answer:

X = 10, y = 5, z = -20.

Solving systems of linear algebraic equations in which the number of equations does not coincide with the number of unknowns or the main matrix of the system is singular, using the Gauss method.

Systems of equations, the main matrix of which is rectangular or square singular, may have no solutions, may have a single solution, or may have an infinite number of solutions.

Now we will understand how the Gauss method allows us to establish the compatibility or inconsistency of a system of linear equations, and in the case of its compatibility, determine all solutions (or one single solution).

In principle, the process of eliminating unknown variables in the case of such SLAEs remains the same. However, it is worth going into detail about some situations that may arise.

Let's move on to the most important stage.

So, let us assume that the system of linear algebraic equations, after completing the forward progression of the Gauss method, takes the form  and not a single equation was reduced to (in this case we would conclude that the system is incompatible). A logical question arises: “What to do next”?

and not a single equation was reduced to (in this case we would conclude that the system is incompatible). A logical question arises: “What to do next”?

Let us write down the unknown variables that come first in all equations of the resulting system:

In our example these are x 1, x 4 and x 5. On the left sides of the equations of the system we leave only those terms that contain the written unknown variables x 1, x 4 and x 5, the remaining terms are transferred to the right side of the equations with the opposite sign:

Let's give the unknown variables that are on the right sides of the equations arbitrary values, where ![]() - arbitrary numbers:

- arbitrary numbers:

After this, the right-hand sides of all equations of our SLAE contain numbers and we can proceed to the reverse of the Gaussian method.

From the last equation of the system we have, from the penultimate equation we find, from the first equation we get

The solution to a system of equations is a set of values of unknown variables

Giving Numbers ![]() different values, we will obtain different solutions to the system of equations. That is, our system of equations has infinitely many solutions.

different values, we will obtain different solutions to the system of equations. That is, our system of equations has infinitely many solutions.

Answer:

Where ![]() - arbitrary numbers.

- arbitrary numbers.

To consolidate the material, we will analyze in detail the solutions of several more examples.

Example.

Solve a homogeneous system of linear algebraic equations  Gauss method.

Gauss method.

Solution.

Let us exclude the unknown variable x from the second and third equations of the system. To do this, to the left and right sides of the second equation, we add, respectively, the left and right sides of the first equation, multiplied by , and to the left and right sides of the third equation, we add the left and right sides of the first equation, multiplied by:

Now let’s exclude y from the third equation of the resulting system of equations:

The resulting SLAE is equivalent to the system  .

.

We leave on the left side of the system equations only the terms containing the unknown variables x and y, and move the terms with the unknown variable z to the right side:

Carl Friedrich Gauss - German mathematician, founder of the method of solving SLAEs of the same name

Carl Friedrich Gauss was a famous great mathematician and at one time he was recognized as the “King of Mathematics”. Although the name "Gauss's method" is generally accepted, Gauss is not its author: Gauss's method was known long before him. Its first description is in the Chinese treatise “Mathematics in Nine Books,” which was compiled between the 2nd century. BC e. and I century. n. e. and is a compilation of earlier works written around the 10th century. BC e.

– consistent exclusion of unknowns. This method is used to solve quadratic systems of linear algebraic equations. Although equations can be easily solved using the Gauss method, students often cannot find the correct solution because they are confused about the signs (pluses and minuses). Therefore, when solving SLAEs, you need to be extremely careful and only then can you easily, quickly and correctly solve even the most complex equation.

Systems of linear algebraic equations have several advantages: the equation does not have to be consistent in advance; it is possible to solve systems of equations in which the number of equations does not coincide with the number of unknown variables or the determinant of the main matrix is equal to zero; It is possible to use the Gaussian method to achieve results with a relatively small number of computational operations.

As already mentioned, the Gaussian method causes some difficulties for students. However, if you learn the method and solution algorithm, you will immediately understand the intricacies of the solution.

First, let's systematize knowledge about systems of linear equations.

Note!

Depending on its elements, an SLAE can have:

- One solution;

- many solutions;

- have no solutions at all.

In the first two cases, the SLAE is called compatible, and in the third case, it is called incompatible. If a system has one solution, it is called definite, and if there is more than one solution, then the system is called indefinite.

Gauss method - theorem, examples of solutions updated: November 22, 2019 by: Scientific Articles.Ru

One of the simplest ways to solve a system of linear equations is a technique based on the calculation of determinants ( Cramer's rule). Its advantage is that it allows you to immediately record the solution; it is especially convenient in cases where the coefficients of the system are not numbers, but some parameters. Its disadvantage is the cumbersomeness of calculations in the case of a large number of equations; moreover, Cramer's rule is not directly applicable to systems in which the number of equations does not coincide with the number of unknowns. In such cases, it is usually used Gaussian method.

Systems of linear equations having the same set of solutions are called equivalent. Obviously, the set of solutions of a linear system will not change if any equations are swapped, or if one of the equations is multiplied by some non-zero number, or if one equation is added to another.

Gauss method (method of sequential elimination of unknowns) is that with the help of elementary transformations the system is reduced to an equivalent system of a step type. First, using the 1st equation, we eliminate x 1 of all subsequent equations of the system. Then, using the 2nd equation, we eliminate x 2 from the 3rd and all subsequent equations. This process, called direct Gaussian method, continues until there is only one unknown left on the left side of the last equation x n. After this it is done inverse of the Gaussian method– solving the last equation, we find x n; after that, using this value, from the penultimate equation we calculate x n–1, etc. We find the last one x 1 from the first equation.

It is convenient to carry out Gaussian transformations by performing transformations not with the equations themselves, but with the matrices of their coefficients. Consider the matrix:

called extended matrix of the system, because, in addition to the main matrix of the system, it includes a column of free terms. The Gaussian method is based on reducing the main matrix of the system to a triangular form (or trapezoidal form in the case of non-square systems) using elementary row transformations (!) of the extended matrix of the system.

Example 5.1. Solve the system using the Gaussian method:

Solution. Let's write out the extended matrix of the system and, using the first row, after that we will reset the remaining elements:

we get zeros in the 2nd, 3rd and 4th rows of the first column:

we get zeros in the 2nd, 3rd and 4th rows of the first column:

Now we need all elements in the second column below the 2nd row to be equal to zero. To do this, you can multiply the second line by –4/7 and add it to the 3rd line. However, in order not to deal with fractions, let's create a unit in the 2nd row of the second column and only

Now, to get a triangular matrix, you need to reset the element of the fourth row of the 3rd column; to do this, you can multiply the third row by 8/54 and add it to the fourth. However, in order not to deal with fractions, we will swap the 3rd and 4th rows and the 3rd and 4th columns and only after that we will reset the specified element. Note that when rearranging the columns, the corresponding variables change places and this must be remembered; other elementary transformations with columns (addition and multiplication by a number) cannot be performed!

The last simplified matrix corresponds to a system of equations equivalent to the original one:

From here, using the inverse of the Gaussian method, we find from the fourth equation x 3 = –1; from the third x 4 = –2, from the second x 2 = 2 and from the first equation x 1 = 1. In matrix form, the answer is written as

We considered the case when the system is definite, i.e. when there is only one solution. Let's see what happens if the system is inconsistent or uncertain.

Example 5.2. Explore the system using the Gaussian method:

Solution. We write out and transform the extended matrix of the system

We write a simplified system of equations:

Here, in the last equation it turned out that 0=4, i.e. contradiction. Consequently, the system has no solution, i.e. she incompatible. à

Example 5.3. Explore and solve the system using the Gaussian method:

Solution. We write out and transform the extended matrix of the system:

As a result of the transformations, the last line contains only zeros. This means that the number of equations has decreased by one:

Thus, after simplifications, there are two equations left, and four unknowns, i.e. two unknown "extra". Let them be "superfluous", or, as they say, free variables, will x 3 and x 4 . Then

Believing x 3 = 2a And x 4 = b, we get x 2 = 1–a And x 1 = 2b–a; or in matrix form

A solution written in this way is called general, because, giving parameters a And b different values, all possible solutions of the system can be described. a

In this article we:

- Let's define the Gaussian method,

- Let's analyze the algorithm of actions for solving linear equations, where the number of equations coincides with the number of unknown variables, and the determinant is not equal to zero;

- Let us analyze the algorithm of actions for solving SLAEs with a rectangular or singular matrix.

Gaussian method - what is it?

Definition 1Gauss method is a method that is used in solving systems of linear algebraic equations and has the following advantages:

- there is no need to check the system of equations for consistency;

- It is possible to solve systems of equations where:

- the number of determinants coincides with the number of unknown variables;

- the number of determinants does not coincide with the number of unknown variables;

- the determinant is zero.

- the result is produced with a relatively small number of computational operations.

Basic definitions and notations

Example 1There is a system of p linear equations with n unknowns (p can be equal to n):

a 11 x 1 + a 12 x 2 + . . . + a 1 n x n = b 1 a 21 x 1 + a 22 x 2 + . . . + a 2 n x n = b 2 ⋯ a p 1 x 1 + a p 2 x 2 + . . . + a p n x n = b p ,

where x 1 , x 2 , . . . . , x n - unknown variables, a i j, i = 1, 2. . . , p , j = 1 , 2 . . . , n - numbers (real or complex), b 1 , b 2 , . . . , b n - free terms.

Definition 2

If b 1 = b 2 = . . . = b n = 0, then such a system of linear equations is called homogeneous, if vice versa - heterogeneous.

Definition 3

SLAE solution - set of values of unknown variables x 1 = a 1, x 2 = a 2, . . . , x n = a n , at which all equations of the system become identical to each other.

Definition 4

Joint SLAU - a system for which there is at least one solution option. Otherwise, it is called inconsistent.

Definition 5

Defined SLAU - This is a system that has a unique solution. If there is more than one solution, then such a system will be called uncertain.

Definition 6

Coordinate type of record:

a 11 x 1 + a 12 x 2 + . . . + a 1 n x n = b 1 a 21 x 1 + a 22 x 2 + . . . + a 2 n x n = b 2 ⋯ a p 1 x 1 + a p 2 x 2 + . . . + a p n x n = b p

Definition 7

Matrix notation: A X = B, where

A = a 11 a 12 ⋯ a 1 n a 21 a 22 ⋯ a 2 n ⋯ ⋯ ⋯ ⋯ a p 1 a p 2 ⋯ a p n - the main matrix of the SLAE;

X = x 1 x 2 ⋮ x n - column matrix of unknown variables;

B = b 1 b 2 ⋮ b n - matrix of free terms.

Definition 8

Extended Matrix - a matrix that is obtained by adding a matrix-column of free terms as an (n + 1) column and is designated T.

T = a 11 a 12 ⋮ a 1 n b 1 a 21 a 22 ⋮ a 2 n b 2 ⋮ ⋮ ⋮ ⋮ ⋮ a p 1 a p 2 ⋮ a p n b n

Definition 9

Singular square matrix A - a matrix whose determinant is equal to zero. If the determinant is not equal to zero, then such a matrix is then called non-degenerate.

Description of the algorithm for using the Gaussian method to solve SLAEs with an equal number of equations and unknowns (reverse and forward progression of the Gaussian method)

First, let's look at the definitions of forward and backward moves of the Gaussian method.

Definition 10

Forward Gaussian move - the process of sequential elimination of unknowns.

Definition 11

Gaussian reversal - the process of sequentially finding unknowns from the last equation to the first.

Gauss method algorithm:

Example 2

We solve a system of n linear equations with n unknown variables:

a 11 x 1 + a 12 x 2 + a 13 x 3 + . . . + a 1 n x n = b 1 a 21 x 1 + a 22 x 2 + a 23 x 3 + . . . + a 2 n x n = b 2 a 31 x 1 + a 32 x 2 + a 33 x 3 + . . . + a 3 n x n = b 3 ⋯ a n 1 x 1 + a n 2 x 2 + a n 3 x 3 + . . . + a n n x n = b n

Matrix determinant not equal to zero .

- a 11 is not equal to zero - this can always be achieved by rearranging the equations of the system;

- we exclude the variable x 1 from all equations of the system, starting from the second;

- Let's add to the second equation of the system the first one, which is multiplied by - a 21 a 11, add to the third equation the first one multiplied by - a 21 a 11, etc.

After these steps, the matrix will take the form:

a 11 x 1 + a 12 x 2 + a 13 x 3 + . . . + a 1 n x n = b 1 a (1) 22 x 2 + a (1) 23 x 3 + . . . + a (1) 2 n x n = b (1) 2 a (1) 32 x 2 + a (1) 33 x 3 + . . . + a (1) 3 n x n = b (1) 3 ⋯ a (1) n 2 x 2 + a (1) n 3 x 3 + . . . + a (1) n n x n = b (1) n ,

where a i j (1) = a i j + a 1 j (- a i 1 a 11), i = 2, 3, . . . , n , j = 2 , 3 , . . . , n , b i (1) = b i + b 1 (- a i 1 a 11) , i = 2 , 3 , . . . , n.

a 11 x 1 + a 12 x 2 + a 13 x 3 + . . . + a 1 n x n = b 1 a (1) 22 x 2 + a (1) 23 x 3 + . . . + a (1) 2 n x n = b (1) 2 a (1) 32 x 2 + a (1) 33 x 3 + . . . + a (1) 3 n x n = b (1) 3 ⋯ a (1) n 2 x 2 + a (1) n 3 x 3 + . . . + a (1) n n x n = b (1) n

It is believed that a 22 (1) is not equal to zero. Thus, we proceed to eliminating the unknown variable x 2 from all equations, starting with the third:

- to the third equation of the system we add the second, which is multiplied by - a (1) 42 a (1) 22 ;

- to the fourth we add the second, which is multiplied by - a (1) 42 a (1) 22, etc.

After such manipulations, the SLAE has next view :

a 11 x 1 + a 12 x 2 + a 13 x 3 + . . . + a 1 n x n = b 1 a (1) 22 x 2 + a (1) 23 x 3 + . . . + a (1) 2 n x n = b (1) 2 a (2) 33 x 3 + . . . + a (2) 3 n x n = b (2) 3 ⋯ a (2) n 3 x 3 + . . . + a (2) n n x n = b (2) n ,

where a i j (2) = a (1) i j + a 2 j (- a (1) i 2 a (1) 22), i = 3, 4, . . . , n , j = 3 , 4 , . . . , n , b i (2) = b (1) i + b (1) 2 (- a (1) i 2 a (1) 22) , i = 3 , 4 , . . . , n. .

Thus, the variable x 2 is excluded from all equations, starting from the third.

a 11 x 1 + a 12 x 2 + a 13 x 3 + . . . + a 1 n x n = b 1 a (1) 22 x 2 + a (1) 23 x 3 + . . . + a (1) 2 n x n = b (1) 2 a (2) 33 x 3 + . . . + a (2) 3 n x n = b (2) 3 ⋯ a (n - 1) n n x n = b (n - 1) n

Note

Once the system has taken this form, you can begin inverse of the Gaussian method :

- calculate x n from the last equation as x n = b n (n - 1) a n n (n - 1) ;

- using the resulting x n, we find x n - 1 from the penultimate equation, etc., find x 1 from the first equation.

Example 3

Find a solution to the system of equations using the Gauss method:

How to decide?

The coefficient a 11 is different from zero, so we proceed to the direct solution, i.e. to the exclusion of the variable x 11 from all equations of the system except the first. In order to do this, we add to the left and right sides of the 2nd, 3rd and 4th equations the left and right sides of the first, which are multiplied by - a 21 a 11:

1 3, - a 31 a 11 = - - 2 3 = 2 3 and - a 41 a 11 = - 1 3.

3 x 1 + 2 x 2 + x 3 + x 4 = - 2 x 1 - x 2 + 4 x 3 - x 4 = - 1 - 2 x 1 - 2 x 2 - 3 x 3 + x 4 = 9 x 1 + 5 x 2 - x 3 + 2 x 4 = 4 ⇔

⇔ 3 x 1 + 2 x 2 + x 3 + x 4 = - 2 x 1 - x 2 + 4 x 3 - x 4 + (- 1 3) (3 x 1 + 2 x 2 + x 3 + x 4) = - 1 + (- 1 3) (- 2) - 2 x 1 - 2 x 2 - 3 x 3 + x 4 + 2 3 (3 x 1 + 2 x 2 + x 3 + x 4) = 9 + 2 3 (- 2) x 1 + 5 x 2 - x 3 + 2 x 4 + (- 1 3) (3 x 1 + 2 x 2 + x 3 + x 4) = 4 + (- 1 3) (- 2 ) ⇔

⇔ 3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 2 3 x 2 - 7 3 x 3 + 5 3 x 4 = 23 3 13 3 x 2 - 4 3 x 3 + 5 3 x 4 = 14 3

We have eliminated the unknown variable x 1, now we proceed to eliminate the variable x 2:

A 32 (1) a 22 (1) = - - 2 3 - 5 3 = - 2 5 and a 42 (1) a 22 (1) = - 13 3 - 5 3 = 13 5:

3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 2 3 x 2 - 7 3 x 3 + 5 3 x 4 = 23 3 13 3 x 2 - 4 3 x 3 + 5 3 x 4 = 14 3 ⇔

⇔ 3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 2 3 x 2 - 7 3 x 3 + 5 3 x 4 + (- 2 5) (- 5 3 x 2 + 11 3 x 3 - 4 3 x 4) = 23 3 + (- 2 5) (- 1 3) 13 3 x 2 - 4 3 x 3 + 5 3 x 4 + 13 5 (- 5 3 x 2 + 11 3 x 3 - 4 3 x 4) = 14 3 + 13 5 (- 1 3) ⇔

⇔ 3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 19 5 x 3 + 11 5 x 4 = 39 5 41 5 x 3 - 9 5 x 4 = 19 5

In order to complete the forward progression of the Gaussian method, it is necessary to exclude x 3 from the last equation of the system - a 43 (2) a 33 (2) = - 41 5 - 19 5 = 41 19:

3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 19 5 x 3 + 11 5 x 4 = 39 5 41 5 x 3 - 9 5 x 4 = 19 5 ⇔

3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 19 5 x 3 + 11 5 x 4 = 39 5 41 5 x 3 - 9 5 x 4 + 41 19 (- 19 5 x 3 + 11 5 x 4) = 19 5 + 41 19 39 5 ⇔

⇔ 3 x 1 + 2 x 2 + x 3 + x 4 = - 2 - 5 3 x 2 + 11 3 x 3 - 4 3 x 4 = - 1 3 - 19 5 x 3 + 11 5 x 4 = 39 5 56 19 x 4 = 392 19

Reverse the Gaussian method:

- from the last equation we have: x 4 = 392 19 56 19 = 7;

- from the 3rd equation we get: x 3 = - 5 19 (39 5 - 11 5 x 4) = - 5 19 (39 5 - 11 5 × 7) = 38 19 = 2;

- from the 2nd: x 2 = - 3 5 (- 1 3 - 11 3 x 4 + 4 3 x 4) = - 3 5 (- 1 3 - 11 3 × 2 + 4 3 × 7) = - 1 ;

- from 1st: x 1 = 1 3 (- 2 - 2 x 2 - x 3 - x 4) = - 2 - 2 × (- 1) - 2 - 7 3 = - 9 3 = - 3 .

Answer : x 1 = - 3 ; x 2 = - 1 ; x 3 = 2 ; x 4 = 7

Example 4

Find a solution to the same example using the Gaussian method in matrix notation:

3 x 1 + 2 x 2 + x 3 + x 4 = - 2 x 1 - x 2 + 4 x 3 - x 4 = - 1 - 2 x 1 - 2 x 2 - 3 x 3 + x 4 = 9 x 1 + 5 x 2 - x 3 + 2 x 4 = 4

How to decide?

The extended matrix of the system is presented as:

x 1 x 2 x 3 x 4 3 2 1 1 1 - 1 4 - 1 - 2 - 2 - 3 1 1 5 - 1 2 - 2 - 1 9 4

The direct approach of the Gaussian method in this case involves reducing the extended matrix to a trapezoidal form using elementary transformations. This process is very similar to the process of eliminating unknown variables in coordinate form.

Matrix transformation begins with turning all elements zero. To do this, to the elements of the 2nd, 3rd and 4th lines we add the corresponding elements of the 1st line, which are multiplied by - a 21 a 11 = - 1 3 , - a 31 a 11 = - - 2 3 = 2 3 i n a - a 41 a 11 = - 1 3 .

Further transformations occur according to the following scheme: all elements in the 2nd column, starting from the 3rd row, become zero. This process corresponds to the process of eliminating a variable. In order to perform this action, it is necessary to add to the elements of the 3rd and 4th rows the corresponding elements of the 1st row of the matrix, which is multiplied by - a 32 (1) a 22 (1) = - 2 3 - 5 3 = - 2 5 and - a 42 (1) a 22 (1) = - 13 3 - 5 3 = 13 5:

x 1 x 2 x 3 x 4 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 - 2 3 - 7 3 5 3 | 23 3 0 13 3 - 4 3 5 3 | 14 3 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 - 2 3 + (- 2 5) (- 5 3) - 7 3 + (- 2 5) 11 3 5 3 + (- 2 5) (- 4 3) | 23 3 + (- 2 5) (- 1 3) 0 13 3 + 13 5 (- 5 3) - 4 3 + 13 5 × 11 3 5 3 + 13 5 (- 4 3) | 14 3 + 13 5 (- 1 3) ~

x 1 x 2 x 3 x 4 ~ 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 41 5 - 9 5 | 19 5

Now we exclude the variable x 3 from the last equation - we add to the elements of the last row of the matrix the corresponding elements of the last row, which is multiplied by a 43 (2) a 33 (2) = - 41 5 - 19 5 = 41 19.

x 1 x 2 x 3 x 4 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 41 5 - 9 5 | 19 5 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 41 5 + 41 19 (- 19 5) - 9 5 + 41 19 × 11 5 | 19 5 + 41 19 × 39 5 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 0 56 19 | 392 19

Now let's apply the reverse method. In matrix notation, the transformation of the matrix is such that the matrix, which is marked in color in the image:

x 1 x 2 x 3 x 4 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 0 56 19 | 392 19

became diagonal, i.e. took the following form:

x 1 x 2 x 3 x 4 3 0 0 0 | a 1 0 - 5 3 0 0 | a 2 0 0 - 19 5 0 | a 3 0 0 0 56 19 | 392 19, where a 1, a 2, and 3 are some numbers.

Such transformations are analogous to the forward motion, only the transformations are performed not from the 1st line of the equation, but from the last. We add to the elements of the 3rd, 2nd and 1st lines the corresponding elements of the last line, which is multiplied by

11 5 56 19 = - 209 280, on - - 4 3 56 19 = 19 42 and on - 1 56 19 = 19 56.

x 1 x 2 x 3 x 4 3 2 1 1 | - 2 0 - 5 3 11 3 - 4 3 | - 1 3 0 0 - 19 5 11 5 | 39 5 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 1 + (- 19 56) 56 19 | - 2 + (- 19 56) 392 19 0 - 5 3 11 3 - 4 3 + 19 42 × 56 19 | - 1 3 + 19 42 × 392 19 0 0 - 19 5 11 5 + (- 209 280) 56 19 | 39 5 + (- 209 280) 392 19 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 0 | - 9 0 - 5 3 11 3 0 | 9 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19

11 3 - 19 5 = 55 57 and on - 1 - 19 5 = 5 19.

x 1 x 2 x 3 x 4 3 2 1 0 | - 9 0 - 5 3 11 3 0 | 9 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 + 5 19 (- 19 5) 0 | - 9 + 5 19 (- 38 5) 0 - 5 3 11 3 + 55 57 (- 19 5) 0 | 9 + 55 57 (- 38 5) 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 2 1 0 | - 11 0 - 5 3 0 0 | 5 3 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19

At the last stage, we add the elements of the 2nd row to the corresponding elements of the 1st row, which are multiplied by - 2 - 5 3 = 6 5.

x 1 x 2 x 3 x 4 3 2 1 0 | - 11 0 - 5 3 0 0 | 5 3 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 2 + 6 5 (- 5 3) 0 0 | - 11 + 6 5 × 5 3) 0 - 5 3 0 0 | 5 3 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19 ~

x 1 x 2 x 3 x 4 ~ 3 0 0 0 | - 9 0 - 5 3 0 0 | 5 3 0 0 - 19 5 0 | - 38 5 0 0 0 56 19 | 392 19

The resulting matrix corresponds to the system of equations

3 x 1 = - 9 - 5 3 x 2 = 5 3 - 19 5 x 3 = - 38 5 56 19 x 4 = 392 19, from where we find the unknown variables.

Answer: x 1 = - 3, x 2 = - 1, x 3 = 2, x 4 = 7.

Description of the algorithm for using the Gauss method for solving SLAEs with a divergent number of equations and unknowns, or with a degenerate matrix system

Definition 2If the underlying matrix is square or rectangular, then systems of equations may have a unique solution, may not have solutions, or may have an infinite number of solutions.

From this section we will learn how to use the Gaussian method to determine the compatibility or incompatibility of SLAEs, and also, in the case of compatibility, determine the number of solutions for the system.

In principle, the method of eliminating unknowns for such SLAEs remains the same, but there are several points that need to be emphasized.

Example 5

At some stages of eliminating unknowns, some equations turn into identities 0=0. In this case, the equations can be safely removed from the system and the direct progression of the Gaussian method can be continued.

If we exclude x 1 from the 2nd and 3rd equations, then the situation turns out to be as follows:

x 1 + 2 x 2 - x 3 + 3 x 4 = 7 2 x 1 + 4 x 2 - 2 x 3 + 6 x 4 = 14 x - x + 3 x + x = - 1 ⇔

x 1 + 2 x 2 - x 3 + 3 x 4 = 7 2 x 1 + 4 x 2 - 2 x 3 + 6 x 4 + (- 2) (x 1 + 2 x 2 - x 3 + 3 x 4) = 14 + (- 2) × 7 x - x + 3 x + x + (- 1) (x 1 + 2 x 2 - x 3 + 3 x 4) = - 1 + (- 1) × 7 ⇔

⇔ x 1 + 2 x 2 - x 3 + 3 x 4 = 7 0 = 0 - 3 x 2 + 4 x 3 - 2 x 4 = - 8

It follows from this that the 2nd equation can be safely removed from the system and the solution can be continued.

If we carry out the direct progression of the Gaussian method, then one or more equations can take the form of a certain number that is different from zero.

This indicates that the equation that turns into equality 0 = λ cannot turn into equality for any values of the variables. Simply put, such a system is inconsistent (has no solution).

Result:

- If, when carrying out the forward progression of the Gaussian method, one or more equations take the form 0 = λ, where λ is a certain number that is different from zero, then the system is inconsistent.

- If, at the end of the forward run of the Gaussian method, a system is obtained whose number of equations coincides with the number of unknowns, then such a system is consistent and defined: it has a unique solution, which is calculated by the reverse run of the Gaussian method.

- If, at the end of the forward run of the Gaussian method, the number of equations in the system turns out to be less than the number of unknowns, then such a system is consistent and has an infinite number of solutions, which are calculated during the reverse run of the Gaussian method.

If you notice an error in the text, please highlight it and press Ctrl+Enter

A system of linear algebraic equations (SLAE) with unknowns is given. It is required to solve this system: determine how many solutions it has (none, one or infinitely many), and if it has at least one solution, then find any of them.

Formally The problem is stated as follows: solve the system:

where are the coefficients and ![]() are known and the variables

are known and the variables ![]() - the desired unknowns.

- the desired unknowns.

A matrix representation of this problem is convenient:

where is a matrix composed of coefficients, and are column vectors of height.

It is worth noting that the SLAE may not be over the field of real numbers, but over the field modulo any number, i.e.:

— the Gaussian algorithm works for such systems too (but this case will be discussed below in a separate section).

Gaussian algorithm

Strictly speaking, the method described below is correctly called the "Gauss-Jordan elimination" method, since it is a variation of the Gauss method described by surveyor Wilhelm Jordan in 1887 (it is worth noting that Wilhelm Jordan is not the author of either the Jordan theorem curves, nor Jordan algebra - all these are three different scientists with the same name; in addition, apparently, the transcription “Jordan” is more correct, but the spelling “Jordan” has already been established in Russian literature). It is also interesting to note that simultaneously with Jordan (and according to some data even before him), this algorithm was invented by B.-I. Clasen.

Basic scheme

Briefly speaking, the algorithm is consistent exclusion variables from each equation until only one variable remains in each equation. If , then we can say that the Gauss-Jordan algorithm strives to reduce the system matrix to the identity matrix - after all, after the matrix has become the identity matrix, the solution to the system is obvious - the solution is unique and is specified by the resulting coefficients.

In this case, the algorithm is based on two simple equivalent transformations of the system: firstly, two equations can be exchanged, and secondly, any equation can be replaced by a linear combination of this row (with a non-zero coefficient) and other rows (with arbitrary coefficients).

On the first step The Gauss-Jordan algorithm divides the first row by a coefficient. Then the algorithm adds the first row to the remaining rows with such coefficients that their coefficients in the first column turn to zero - for this, obviously, when adding the first row to the -th one, you need to multiply it by . For each operation with a matrix (division by a number, adding another to one row), the corresponding operations are performed with the vector; in a sense, it behaves as if it were the th column of the matrix.

As a result, at the end of the first step, the first column of the matrix will become one (i.e., it will contain a one in the first row and zeros in the rest).

The second step of the algorithm is carried out similarly, only now the second column and the second row are considered: first, the second row is divided by , and then subtracted from all other rows with such coefficients as to reset the second column of the matrix.

Pivoting search

Of course, the diagram described above is incomplete. It works only if at each -th step the element is different from zero - otherwise we simply cannot achieve zeroing of the remaining coefficients in the current column by adding the -th row to them.

To make the algorithm work in such cases, there is precisely a process selecting a reference element(in English this is called in one word "pivoting"). It consists in rearranging the rows and/or columns of the matrix so that the desired element contains a non-zero number.

Note that rearranging rows is much easier to implement on a computer than rearranging columns: after all, when swapping two columns, you need to remember that these two variables swapped places, so that later, when restoring the answer, you can correctly restore which answer belongs to which variable . When rearranging rows, no such additional actions need to be performed.

Fortunately, for the method to be correct, row exchanges alone are sufficient (the so-called “partial pivoting”, as opposed to “full pivoting”, when both rows and columns are exchanged). But which string should you choose for exchange? And is it true that the search for a reference element should be done only when the current element is zero?

There is no general answer to this question. There are various heuristics, but the most effective of them (in terms of simplicity and impact) is this heuristic: the element with the largest modulus should be taken as the reference element, and it is necessary to search for the reference element and exchange with it Always, and not only when necessary (i.e. not only when ).

In other words, before executing the th phase of the Gauss-Jordan algorithm with the partial pivoting heuristic, it is necessary to find in the th column among the elements with indices from to maximum modulo, and exchange the row with this element with the th row.

Firstly, this heuristic will allow you to solve the SLAE, even if during the solution it happens that the element . Secondly, and quite importantly, this heuristic improves numerical stability Gauss-Jordan algorithm.

Without this heuristic, even if the system is such that at each phase the Gauss-Jordan algorithm will work, but in the end the accumulated error may turn out to be so huge that even for matrices of size about the error will exceed the answer itself.

Degenerate cases

So, if we stop at the Gauss-Jordan algorithm with partial pivoting, then, it is argued, if the system is non-degenerate (i.e. has a non-zero determinant, which means that it has a unique solution), then the algorithm described above will work completely and come to the unit matrix (the proof of this, i.e. that there will always be a non-zero support element, is not given here).

Let's now consider general case- when and are not necessarily equal. Let us assume that the support element was not found at the th step. This means that in the th column, all rows starting from the current one contain zeros. It is stated that in this case this th variable cannot be defined, and is independent variable(can take any value). In order for the Gauss-Jordan algorithm to continue its work for all subsequent variables, in such a situation you just need to skip the current -th column without increasing the number of the current row (we can say that we are virtually removing the -th column of the matrix).

So, some variables during the operation of the algorithm may turn out to be independent. It is clear that when the number of variables is greater than the number of equations, then at least the variables will be found to be independent.

In general, if at least one independent variable is found, then it can take on an arbitrary value, while the remaining (dependent) variables will be expressed through it. This means that when we work in the field of real numbers, the system potentially has infinitely many solutions(if we consider a SLAE modulo, then the number of solutions will be equal to this modulus to the power of the number of independent variables). However, one should be careful: one must remember that even if independent variables were discovered, nevertheless the SLAE may have no solutions at all. This happens when the remaining unprocessed equations (those that the Gauss-Jordan algorithm did not reach, i.e. these are equations in which only independent variables remain) have at least one non-zero free term.

However, it is easier to check this by explicitly substituting the found solution: assign zero values to all independent variables, assign the found values to the dependent variables, and substitute this solution into the current SLAE.

Implementation

Here we present an implementation of the Gauss-Jordan algorithm with the partial pivoting heuristic (choosing a reference element as the maximum in the column).

The system matrix itself is transmitted to the function input. The last column of the matrix is, in our old notation, the column of free coefficients (this was done for programming convenience - since in the algorithm itself, all operations with free coefficients repeat operations with the matrix).

The function returns the number of solutions to the system (, or) (infinity is indicated in the code by a special constant, which can be set to any large value). If at least one solution exists, then it is returned in the vector.

int gauss (vector< vector< double >> a, vector< double >& ans) ( int n = (int ) a.size () ; int m = (int ) a[ 0 ] .size () - 1 ; vector< int >< m && row< n; ++ col) { int sel = row; for (int i= row; i< n; ++ i) if (abs (a[ i] [ col] ) >abs (a[ sel] [ col] ) ) sel = i; if (abs (a[ sel] [ col] )< EPS) continue ; for (int i= col; i<= m; ++ i) swap (a[ sel] [ i] , a[ row] [ i] ) ; where[ col] = row; for (int i= 0 ; i< n; ++ i) if (i ! = row) { double c = a[ i] [ col] / a[ row] [ col] ; for (int j= col; j<= m; ++ j) a[ i] [ j] - = a[ row] [ j] * c; } ++ row; } ans.assign (m, 0 ) ; for (int i= 0 ; i< m; ++ i) if (where[ i] ! = - 1 ) ans[ i] = a[ where[ i] ] [ m] / a[ where[ i] ] [ i] ; for (int i= 0 ; i< n; ++ i) { double sum = 0 ; for (int j= 0 ; j< m; ++ j) sum + = ans[ j] * a[ i] [ j] ; if (abs (sum - a[ i] [ m] ) >EPS) return 0 ; ) for (int i= 0 ; i< m; ++ i) if (where[ i] == - 1 ) return INF; return 1 ; }The function supports two pointers - to the current column and the current row.

A vector is also created in which for each variable it is written in which row it should appear (in other words, for each column the number of the row in which this column is non-zero is written). This vector is needed because some variables may not have been “defined” during the solution (that is, these are independent variables that can be assigned an arbitrary value - for example, in the above implementation these are zeros).

The implementation uses the partial pivoting technique, searching for the row with the maximum modulus element, and then rearranging this row into position (although explicit row rearrangement can be replaced by exchanging two indices in some array, in practice this will not give a real gain, since the exchanges operations are wasted).

In the implementation, for the sake of simplicity, the current row is not divided by the leading element - so that, as a result, at the end of the algorithm, the matrix becomes not a unit matrix, but a diagonal one (however, apparently, dividing the row by the leading element allows us to somewhat reduce the resulting errors).

After finding a solution, it is inserted back into the matrix to check whether the system has at least one solution or not. If the verification of the found solution was successful, then the function returns or - depending on whether there is at least one independent variable or not.

Asymptotics

Let us estimate the asymptotic behavior of the resulting algorithm. The algorithm consists of phases, at each of which the following occurs:

Obviously, the first point has a smaller asymptotic behavior than the second. Note also that the second point is performed no more than once—as many times as there can be dependent variables in the SLAE.

Thus, final asymptotics the algorithm takes the form .

When this estimate turns into .

Note that when the SLAE is considered not in the field of real numbers, but in the field modulo two, then the system can be solved much faster - see this below in the section “Solving SLAE modulo”.

More accurate estimate of the number of actions

As we already know, the running time of the entire algorithm is actually determined by the time spent eliminating the current equation from the rest.

This can happen at each of the steps, with the current equation being added to all the others. When adding, work is done only with columns, starting with the current one. Thus, the total is operations.

Add-ons

Acceleration of the algorithm: dividing it into forward and reverse stroke

You can achieve a twofold acceleration of the algorithm by considering another version of it, a more classical one, when the algorithm is divided into forward and reverse phases.

In general, in contrast to the algorithm described above, it is possible to reduce the matrix not to diagonal form, but to triangular view- when all elements strictly below the main diagonal are equal to zero.

A system with a triangular matrix is solved trivially - first, the value of the last variable is immediately found from the last equation, then the found value is substituted into the penultimate equation and the value of the penultimate variable is found, and so on. This process is called in reverse Gaussian algorithm.

Straight stroke The Gaussian algorithm is an algorithm similar to the Gauss-Jordan algorithm described above, with one exception: the current variable is not excluded from all equations, but only from the equations after the current one. The result of this is actually not a diagonal, but a triangular matrix.

The difference is that forward stroke works faster Gauss-Jordan algorithm - since on average it makes half as many additions of one equation to another. The reverse stroke works in , which in any case is asymptotically faster than the forward stroke.

Thus, if , then this algorithm will perform already operations - which is half as much as the Gauss-Jordan algorithm.

Solution of SLAE modulo

To solve modulo SLAEs, you can use the algorithm described above; it retains its correctness.

Of course, now it becomes unnecessary to use any tricky techniques for selecting a reference element - it is enough to find any non-zero element in the current column.

If the module is simple, then no difficulties arise at all - divisions occurring during the operation of the Gaussian algorithm do not create any special problems.

Particularly remarkable module equal to two: For him, all operations with the matrix can be performed very efficiently. For example, subtracting one string from another modulo two is actually their symmetric difference (“xor”). Thus, the entire algorithm can be significantly accelerated by compressing the entire matrix into bit masks and operating only with them. Here is a new implementation of the main part of the Gauss-Jordan algorithm, using the standard C++ "bitset" container:

int gauss (vector< bitset< N>> a, int n, int m, bitset< N>& ans) (vector< int >where (m, - 1 ) ; for (int col= 0 , row= 0 ; col< m && row< n; ++ col) { for (int i= row; i< n; ++ i) if (a[ i] [ col] ) { swap (a[ i] , a[ row] ) ; break ; } if (! a[ row] [ col] ) continue ; where[ col] = row; for (int i= 0 ; i< n; ++ i) if (i ! = row && a[ i] [ col] ) a[ i] ^ = a[ row] ; ++ row; }As you can see, the implementation has even become a little shorter, despite the fact that it is much faster than the old implementation - namely, several times faster due to bit compression. It should also be noted that solving systems modulo two in practice works very quickly, since cases when it is necessary to subtract another from one row occur quite rarely (on sparse matrices, this algorithm can work in a time of the order of the square of the size rather than the cube).

If the module arbitrary(not necessarily simple), then everything becomes somewhat more complicated. It is clear that using the Chinese remainder theorem, we reduce the problem with an arbitrary module only to modules of the form “degree of prime”. [further text has been hidden because This is unverified information - perhaps the wrong way to solve ]

Finally, let's look at the question number of SLAE solutions modulo. The answer to this is quite simple: the number of solutions is equal to , where is the modulus and is the number of independent variables.

A little about the different ways to select a support element

As mentioned above, there is no clear answer to this question.

The "partial pivoting" heuristic, which consisted of finding the maximum element in the current column, works quite well in practice. It also turns out that it gives almost the same result as “full pivoting” - when the pivot element is searched among the elements of the entire submatrix - starting from the current row and from the current column.

But it's interesting to note that both of these max-element heuristics are actually very dependent on how the original equations were scaled. For example, if one of the equations of the system is multiplied by a million, then this equation will almost certainly be chosen as the leading one in the first step. This seems quite strange, so it is logical to move on to a slightly more complex heuristic - the so-called "implicit pivoting".

The heuristic of implicit pivoting is that the elements of different rows are compared as if both rows were normalized in such a way that the maximum element in them would be equal to one. To implement this technique, you simply need to maintain the current maximum in each row (or maintain each row so that the maximum in it is equal to one in absolute value, but this can lead to an increase in the accumulated error).

Improvement of the found answer

Because, despite various heuristics, the Gauss-Jordan algorithm can still lead to large errors on special matrices even of sizes of the order of - .

In this regard, the answer obtained by the Gauss-Jordan algorithm can be improved by applying some simple numerical method to it - for example, the simple iteration method.

Thus, the solution turns into a two-step one: first the Gauss-Jordan algorithm is executed, then some numerical method is performed that takes the solution obtained in the first step as initial data.

This technique allows us to somewhat expand the set of problems solved by the Gauss-Jordan algorithm with an acceptable error.

Literature

- William H. Press, Saul A. Teukolsky, William T. Vetterling, Brian P. Flannery. Numerical Recipes: The Art of Scientific Computing

- Anthony Ralston, Philip Rabinowitz. A first course in numerical analysis